Carol Lefebvre, Julie Glanville, Simon Briscoe, Anne Littlewood, Chris Marshall, Maria-Inti Metzendorf, Anna Noel-Storr, Tamara Rader, Farhad Shokraneh, James Thomas and L. Susan Wieland on behalf of the Cochrane Information Retrieval Methods Group

This technical supplement should be cited as: Lefebvre C, Glanville J, Briscoe S, Littlewood A, Marshall C, Metzendorf M-I, Noel-Storr A, Rader T, Shokraneh F, Thomas J, Wieland LS. Technical Supplement to Chapter 4: Searching for and selecting studies. In: Higgins JPT, Thomas J, Chandler J, Cumpston MS, Li T, Page MJ, Welch VA (eds). Cochrane Handbook for Systematic Reviews of Interventions Version 6.1 (updated September 2020). Cochrane, 2020. Available from: www.training.cochrane.org/handbook.

This technical supplement is also available as a PDF document.

Throughout this technical supplement we refer to the Methodological Expectations of Cochrane Intervention Reviews (MECIR), which are methodological standards to which all Cochrane Protocols, Reviews, and Updates are expected to adhere. More information can be found on these standards at: https://methods.cochrane.org/mecir and, with respect to searching for and selecting studies, in Chapter 4 of the Cochrane Handbook for Systematic Reviews of Interventions.

1 Sources to search

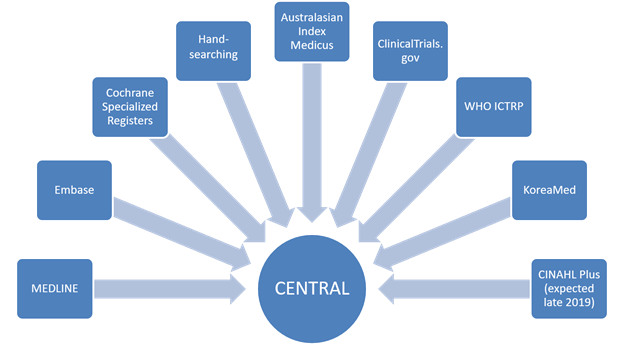

For discussion of CENTRAL, MEDLINE and Embase as the key database sources to search, please refer to Chapter 4, Section 4.3. For discussion of sources other than CENTRAL, MEDLINE and Embase, please see the sections below.

1.1 Bibliographic databases other than CENTRAL, MEDLINE and Embase

1.1.1 The Cochrane Register of Studies

The Cochrane Register of Studies (CRS) is a bespoke Cochrane data repository and data management system, primarily used by Cochrane Information Specialists (CISs). The specialized trials registers maintained by CISs are stored and managed within the CRS. As such, the CRS acts as a ‘meta-register’ of all the trials identified by Cochrane, although each Cochrane Group has its own section (segment) within the larger database (Littlewood et al 2017). The Cochrane Central Register of Controlled Trials (CENTRAL) is created within the CRS, drawn partly from the references CISs add to their own segments and partly from references to trial reports sourced from other bibliographic databases (e.g. PubMed and Embase). The CRS is the only route available for publication of records in CENTRAL (Littlewood et al 2017).

As a piece of web-based software, the CRS provides tools to manage search activities both for the Cochrane group’s Specialized Register and for individual Cochrane Reviews. CISs are able to import records from external bibliographic databases and other sources into the CRS, de-duplicate them, share them with author teams and track what has been previously retrieved via searching and screened for each review. A further benefit is that trials register records (currently ClinicalTrials.gov and the WHO International Clinical Trials Registry Platform) are searchable from within the CRS. It is possible to store the full text of each bibliographic citation (and any accompanying documents, such as translations) within the CRS as an attachment, but this should always be done in compliance with local copyright and database licensing agreements. Records added to the CRS that will be published in CENTRAL are automatically edited in accordance with the Cochrane HarmoniSR guidance, which ensures consistency in record formatting and output (HarmoniSR Working Group 2015).
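This supplement does not describe how the CRS de-duplicates imported records. As a hypothetical illustration only, the Python sketch below shows one common approach: two records are treated as duplicates if they share a normalised DOI, or a normalised title together with the same publication year. The `Record` class, the matching rules and the example records (whose DOIs use the `10.1000` example prefix) are assumptions for illustration, not the CRS implementation.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass
class Record:
    # Hypothetical minimal bibliographic record
    title: str
    year: int
    doi: Optional[str] = None


def _normalise_title(title: str) -> str:
    # Lower-case and keep only letters and digits, so punctuation and
    # capitalisation differences do not block a match
    return re.sub(r"[^a-z0-9]", "", title.lower())


def match_keys(rec: Record) -> Set[Tuple]:
    # Every record gets a title+year key; records with a DOI also get a DOI key
    keys: Set[Tuple] = {("title", _normalise_title(rec.title), rec.year)}
    if rec.doi:
        keys.add(("doi", rec.doi.strip().lower()))
    return keys


def deduplicate(records: List[Record]) -> List[Record]:
    """Keep the first record of each duplicate group, in input order."""
    seen: Set[Tuple] = set()
    unique: List[Record] = []
    for rec in records:
        keys = match_keys(rec)
        if keys.isdisjoint(seen):
            unique.append(rec)
        # Remember this record's keys even if it was a duplicate, so later
        # records can match on any identifier seen so far
        seen |= keys
    return unique


# Hypothetical imported records; the DOIs use the 10.1000 example prefix
records = [
    Record("Exercise therapy for cancer patients.", 2004, "10.1000/ABC123"),
    Record("Exercise Therapy for Cancer Patients", 2004),           # same title, no DOI
    Record("Exercise therapy in cancer", 2004, "10.1000/abc123"),   # same DOI
    Record("Social interventions and health", 2005),
]
print([r.title for r in deduplicate(records)])
# → ['Exercise therapy for cancer patients.', 'Social interventions and health']
```

Because identifiers are accumulated from every record, a duplicate can be recognised by its DOI even when its title differs. Exact matching on normalised titles will still miss records with typos or truncated titles, so practical de-duplication usually needs more tolerant comparisons as well.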

The CRS captures links among references, studies and the Cochrane Reviews within which they appear. This information is drawn from CRS-D, a data repository which sits behind the CRS and includes all CENTRAL records, all included and excluded studies together with ongoing studies, studies awaiting classification and other records collected by CISs in their Specialized Registers. CRS-D has been designed to integrate with RevMan and Archie and this linking of data and information back to the reviews will ultimately help review teams find trials more efficiently. For example, CRS-D records can be linked to records in the Reviews Database that powers RevMan Web, so users can access additional data about the studies that appear in reviews, such as the characteristics of studies, ‘Risk of bias’ tables and, where possible, the extracted data from the study.

The CRS is a mixture of public records (i.e. CENTRAL records) and private records for the use of Cochrane editorial staff only. Full access to the content in CRS is available only to designated staff within Cochrane editorial teams. Permission to perform tasks is controlled through Archie, Cochrane’s central server for managing documents and contact details (Littlewood et al 2017).

1.1.2 National and regional databases

In addition to MEDLINE and Embase, which are generally considered to be the key international general healthcare databases, many countries and regions produce bibliographic databases that focus on the literature produced in those regions and which often include journals and other literature not indexed elsewhere, such as African Index Medicus and LILACS (for Latin America and the Caribbean). It is highly desirable, for Cochrane reviews of interventions, that searches be conducted of appropriate national and regional bibliographic databases (MECIR C25). Searching these databases in some cases identifies unique studies that are not available through searching major international databases (Clark et al 1998, Brand-de Heer 2001, Clark and Castro 2001, Clark and Castro 2002, Abhijnhan et al 2007, Almerie et al 2007, Xia et al 2008, Atsawawaranunt et al 2009, Barnabas et al 2009, Manriquez 2009, Waffenschmidt et al 2010, Atsawawaranunt et al 2011, Wu et al 2013, Bonfill et al 2015, Cohen et al 2015, Xue et al 2016). Access to many of these databases is available free of charge. Others are only available by subscription or on a ‘pay-as-you-go’ basis. Indexing complexity and consistency vary, as does the sophistication of the search interfaces.

For a list of general healthcare databases, see Appendix.

1.1.3 Subject-specific databases

It is highly desirable, for authors of Cochrane reviews of interventions, to search appropriate subject-specific bibliographic databases (MECIR C25). Which subject-specific databases to search in addition to CENTRAL, MEDLINE and Embase will be influenced by the topic of the review, access to specific databases and budget considerations.

Most of the main subject-specific databases such as AMED (alternative therapies), CINAHL (nursing and allied health) and PsycINFO (psychology and psychiatry) are available only on a subscription or ‘pay-as-you-go’ basis. Access to databases is, therefore, likely to be limited to those databases that are available to the Cochrane Information Specialist at the CRG editorial base or those that are available at the institutions of the review authors. Access arrangements vary according to institution. Review authors should seek advice from their medical / healthcare librarian or information specialist about access at their institution.

Although there is overlap in content coverage across Embase, MEDLINE and CENTRAL and subject-specific databases such as AMED, CINAHL and PsycINFO (Moseley et al 2009), their performance (Watson and Richardson 1999a, Watson and Richardson 1999b) and facilities vary. In addition, a comparison of British Nursing Index and CINAHL shows that even in databases in a specific field such as nursing, each database covers unique journal titles (Briscoe and Cooper 2014). To find qualitative research, CINAHL and PsycINFO should be searched in addition to MEDLINE and Embase (Subirana et al 2005, Wright et al 2015, Rogers et al 2017). Even in cases where research indicates low benefit in searching CINAHL, it is still suggested that for subject-specific reviews it should be considered as an option (Beckles et al 2013).

There are also several studies, each based on a single review, and therefore not necessarily generalizable to all reviews in all topics, showing that searching subject-specific databases identified additional relevant publications. It is unclear, however, whether these additional publications would change the conclusions of the review. For example, for a review of exercise therapy for cancer patients, searching CancerLit, CINAHL, and PsycINFO identified additional records which were not retrieved by MEDLINE searches but searching SPORTDiscus identified no additional records (Stevinson and Lawlor 2004); for a review of social interventions, only four of the 69 (less than 6%) relevant studies were found by searching databases such as MEDLINE, while about half of the relevant studies were found by searching the Transport database (Ogilvie et al 2005); in an obesity review, searching the Health Management Information Consortium (HMIC) database identified about one fifth of included publications in addition to MEDLINE searches while CINAHL identified no new publications; and finally, in a tuberculosis review, searching CINAHL identified over 5% of the included publications in addition to MEDLINE, whereas the HMIC database identified no additional publications (Levay et al 2015).

For a list of subject-specific healthcare databases, see Appendix.

1.1.4 Citation indexes

Citation indexes are bibliographic databases which index citations in addition to the standard bibliographic content. They were originally developed to efficiently identify the reference lists of scholarly authors and the number of times a study or author is cited (Garfield 2007). Citation indexes can also be used creatively to identify studies which are similar to a source study, as it is probable that studies which cite or are cited by a source study will contain similar content.

Searching using a citation index is usually called ‘citation searching’ or ‘citation chasing’ and is further defined as ‘forwards citation searching’ or ‘backwards citation searching’ depending on which direction the citations are searched. Forwards citation searching identifies studies which cite a source study and backwards citation searching identifies studies cited by the source study. Citation indexes are mainly used for forwards citation searching, which is practically impossible to conduct manually, whereas backwards citation searching is relatively easy to conduct manually by consulting reference lists of source studies (see Section 1.3.4). Thus the focus in this section is on forwards citation searching. Citation indexes also facilitate author citation searching which is used to identify studies that are carried out by an author and studies that cite an author.

It is good practice to carry out forwards citation searching on studies that meet the eligibility criteria of a systematic review. Thus forwards citation searching usually takes place after the results of the bibliographic database searches have been screened and a set of potentially includable studies has been identified. Because citation searching is not based on pre-specified terminology, it has the potential to retrieve studies that are not retrieved by the keyword-based search strategies that are conducted in bibliographic databases and other resources. This makes citation searching particularly effective in systematic reviews where the search terms are difficult to define. In some reported cases it has usefully been extended to iterative citation searching, in which the studies identified by citation searching are themselves citation searched (also known as ‘snowballing’) (Booth 2001, Greenhalgh and Peacock 2005, Papaioannou et al 2010, Linder et al 2015). Since researchers may selectively cite studies with positive results, forwards citation searching should be used with caution as an adjunct to other search methods in Cochrane Reviews.
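To make the difference between forwards and backwards citation searching, and the iterative ‘snowballing’ extension, more concrete, the Python sketch below runs them over a small hypothetical citation index held in memory. The study labels, the `CITES` dictionary and the `is_relevant` screening function are illustrative assumptions. In practice the citation links would come from a citation index such as Web of Science or Scopus, and relevance would be judged by human screeners against the review’s eligibility criteria.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Set

# Hypothetical citation index: each study maps to the studies in its reference list
CITES: Dict[str, List[str]] = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": [],
    "D": ["A"],
    "E": ["B", "D"],
    "F": [],
}


def backwards(study: str, cites: Dict[str, List[str]] = CITES) -> Set[str]:
    """Backwards citation searching: the studies cited by `study`."""
    return set(cites.get(study, []))


def forwards(study: str, cites: Dict[str, List[str]] = CITES) -> Set[str]:
    """Forwards citation searching: the studies that cite `study`.
    This requires scanning the whole index, which is why it is
    impractical to do by hand."""
    return {other for other, refs in cites.items() if study in refs}


def snowball(seeds: Iterable[str], is_relevant: Callable[[str], bool],
             cites: Dict[str, List[str]] = CITES) -> Set[str]:
    """Iterative forwards and backwards citation searching from seed studies.
    Only candidates judged relevant are themselves citation searched."""
    found = set(seeds)
    queue = deque(found)
    while queue:
        study = queue.popleft()
        for candidate in forwards(study, cites) | backwards(study, cites):
            if candidate not in found and is_relevant(candidate):
                found.add(candidate)
                queue.append(candidate)
    return found


print(sorted(forwards("A")))    # → ['D']
print(sorted(backwards("A")))   # → ['B', 'C']
# Screening excludes E, so E is never citation searched in turn
print(sorted(snowball(["A"], lambda s: s != "E")))  # → ['A', 'B', 'C', 'D']
```

Study F is never found because nothing links it to the seed study, which mirrors the point that citation searching only reaches studies connected by citation and is therefore an adjunct to, not a replacement for, other search methods.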

There are varied findings on the efficiency of forwards citation searching, measured as the labour required to export and screen the results of searches relative to the number of unique studies identified (Wright et al 2014, Hinde and Spackman 2015, Levay et al 2016, Cooper et al 2017a). Most studies that compared the results of forwards citation searching with other search methods, however, found that citation searching identified one or more unique studies which were relevant to the review question (Greenhalgh and Peacock 2005, Papaioannou et al 2010, Wright et al 2014, Hinde and Spackman 2015, Linder et al 2015). Reviews of recently published studies, such as review updates, are less likely to benefit from forwards citation searching than reviews with no historical date limit for includable studies, because recent studies have had relatively little time to be cited. When conducting a review update, however, searchers should consider carrying out forwards citation searching on the studies included in the original review and on the original review itself.

The two main subscription citation indexes are Web of Science, which was launched in 1964 and is currently provided by Clarivate Analytics, and Scopus, which was launched in 2004 by Elsevier. Google Scholar, which was also launched in 2004, can be used for forwards but not backwards citation searching. Microsoft Academic was relaunched in 2015 (Sinha et al 2015). It can be used for both forwards and backwards citation searching. A summary of each resource is provided below. There are published comparative studies which can be consulted for a more detailed analysis (Kulkarni et al 2009, Wright et al 2014, Levay et al 2016, Cooper et al 2017b).

Web of Science

Web of Science (formerly Web of Knowledge), produced by Clarivate Analytics, comprises several databases. The ‘Core Collection’ databases cover the sciences (1900 to date), social sciences (1956 to date), and arts and humanities (1975 to date). The sciences and social sciences collections are divided into journal articles and conference proceedings, which can be searched separately. In total, the Web of Science Core Collection contains over 74 million records from more than 21,100 journal titles, books and conference proceedings (Web of Science 2019). Additional databases are available via the Web of Science platform, also on a subscription basis. Author citation searching is possible in Web of Science but it does not automatically distinguish between authors with the same name unless they have registered for a uniquely assigned Web of Science ResearcherID.

https://clarivate.com/products/web-of-science/

Scopus

Scopus, produced by Elsevier, covers health sciences, life sciences, physical sciences and social sciences. As of March 2019, it contains approximately 69 million records from 21,500 journal titles and 88,800 conference proceedings dating back to 1823 (Scopus 2017). Citation details are mainly available from 1996 to date, though Scopus is in the process of adding details of pre-1996 citations and is expanding the total number of pre-1996 records (Beatty 2015). A unique identification number is automatically assigned to each author in the database, which enables it to distinguish between authors with the same name when author citation searching. Errors are still possible, however, as publications are not always assigned correctly to author ID numbers and authors are sometimes erroneously assigned more than one ID number.

https://www.elsevier.com/solutions/scopus

Google Scholar

Google Scholar is a freely available scholarly search engine which uses automated web crawlers to identify and index scholarly references, including published studies and grey literature. Although it can only be used for forwards citation searching, this limitation has little practical significance as backwards citation searching can be easily conducted manually by checking reference lists. The precise number of journals indexed by Google Scholar is not known because it does not use a pre-specified list of journals to populate its content. There is, however, evidence that it has sufficient citation coverage to be used as an alternative to Web of Science or Scopus, if these databases are not available (Wright et al 2014, Levay et al 2016).

A disadvantage of Google Scholar’s automated study identification method is that it produces more duplicate citations than Web of Science, which indexes pre-specified journal content (Haddaway et al 2015). Scopus, which uses a similar indexing method to Web of Science, is also likely to produce fewer duplicates than Google Scholar. A further disadvantage of Google Scholar is that the export features are basic and inefficient and are only marginally improved by linking to its preferred reference manager software, Zotero (Bramer et al 2013, Levay et al 2016). Google Scholar citations can also be exported to the Publish or Perish software (Harzing 2007). Finally, Google Scholar limits the number of viewable results to 1000 and does not disclose how the top 1000 results are selected, thus compromising the transparency and reproducibility of search results (Levay et al 2016).

Microsoft Academic

Microsoft Academic is a relatively new scholarly search engine, with many similarities to Google Scholar. It is free to access, and identifies its source material from the ‘Bing’ web crawler, and so contains both journal articles and reports of research that are not indexed in mainstream bibliographic databases. Like Google Scholar, it is made up of a ‘graph’ of publications that are connected to one another by citation, author, and institutional relationships. Unlike Google Scholar, it provides for both forwards and backwards citation searching, and also contains a ‘related’ documents feature, which identifies documents which its algorithm considers to be closely related to one another. As well as being available through its website, Microsoft Academic also publishes an Application Programming Interface (API) - for other software applications to ‘plug’ into - and it is possible to obtain copies of the entire dataset on request. The API and raw data are probably of greater interest to tool developers than information specialists (though there are some tools in R that provide access to the API), but the greater openness of this dataset compared with Google Scholar may result in the development of a number of useful applications for systematic review authors over time.

https://academic.microsoft.com/

Web of Science, Scopus, Google Scholar and Microsoft Academic all provide wide coverage of healthcare journal publications. There are, however, differences in the number of records indexed in each citation index and in the methods used to index records, and there is evidence that these differences affect the number of citations which are identified when citation searching (Kulkarni et al 2009, Wright et al 2014, Rogers et al 2016). It is not a requirement for Cochrane Reviews, however, to conduct exhaustive citation searching using multiple citation indexes. Review authors and information specialists should consider the time and resources available and the likelihood of identifying unique studies for the review question, when planning whether and how to conduct forwards citation searching.

Further evidence-based analysis of the value of citation searching for systematic reviews can be found on the regularly updated SuRe Info portal in the section entitled Value of using different search approaches (http://vortal.htai.org/?q=node/993).

1.1.5 Dissertations and theses databases

It is highly desirable, for authors of Cochrane reviews of interventions, to search relevant grey literature sources such as reports, dissertations, theses, databases and databases of conference abstracts (MECIR C28). Dissertations and theses are a subcategory of grey literature, which may report studies of relevance to review authors. Searching for unpublished academic research may be important for countering possible publication bias but it can be time consuming and in some cases yield few included studies (van Driel et al 2009). In some areas of medicine, searching for and retrieving unpublished dissertations has been shown to have a limited influence on the conclusions of a review (Vickers and Smith 2000, Royle et al 2005). In other areas of medicine, however, it is essential to broaden the search to include unpublished trials, for example in oncology and in complementary medicine (Egger et al 2003). In a study of 129 systematic reviews from three Cochrane Review Groups (the Acute Respiratory Infections Group, the Infectious Diseases Group and the Developmental, Psychosocial and Learning Problems Group) there was wide variation in the retrieval and inclusion of dissertations (Hartling et al 2017). It is possible that a study which would affect the conclusions would be missed if the search is not comprehensive enough to include searches for unpublished trials, including those reported only in dissertations and theses (Egger et al 2003). The failure to search for unpublished trials, such as those in dissertations and theses databases, may lead to biased results in some reviews (Ziai et al 2017). Dissertations and theses are not normally indexed in general bibliographic databases such as MEDLINE or Embase, but there are exceptions, such as CINAHL, which indexes nursing, physical therapy and occupational health dissertations, and PsycINFO, which indexes dissertations in psychiatry and psychology.

To identify relevant studies published in dissertations or theses it is advisable to search specific dissertation sources:

- The US-based Center for Research Libraries (CRL) is an international consortium of university, college, and independent research libraries (http://catalog.crl.edu/search~S4)

- The LILACS database includes some theses and dissertations from Latin American and Caribbean countries (http://lilacs.bvsalud.org/en/)

- Open Access Theses and Dissertations (OATD) includes electronic theses and dissertations that are free to access and read online from participating universities from around the world (https://oatd.org/)

- ProQuest Dissertations and Theses Global (PQDT) is the best-known commercial database for searching dissertations. Access to PQDT is by subscription. As at August 2019, ProQuest Dissertations and Theses Global database indexes approximately 5 million doctoral dissertations and Master’s theses from around the world (http://www.proquest.com/products-services/pqdtglobal.html)

Other sources of dissertations and theses include the catalogues and resources produced by national libraries and research centres, for example:

- Australian theses are searchable via the National Library of Australia’s Trove service (http://trove.nla.gov.au/)

- DART-Europe is a partnership of several research libraries and library consortia which provides global access to European research theses via a portal. A list of institutions, national libraries and consortia who contribute to the portal can be found here: (http://www.dart-europe.eu/basic-search.php)

- Deutsche Nationalbibliothek (German National Library) provides access to electronic versions of theses and dissertations since 1998 (https://www.dnb.de/dissonline)

- The Networked Digital Library of Theses and Dissertations (NDLTD) is an international organization dedicated to promoting the adoption, creation, use, dissemination, and preservation of electronic theses and dissertations (http://search.ndltd.org/)

- Swedish University Dissertations offers dissertations in English, some of which are available to download (http://www.dissertations.se/)

- Theses Canada provides access to the National Library of Canada’s records of PhD and Master’s theses from Canadian universities (www.collectionscanada.gc.ca/thesescanada/)

Other countries also offer access to dissertations and theses in their national languages.

Whenever possible, review authors should attempt to include all relevant studies of acceptable quality, irrespective of the type of publication, since the inclusion of these may have an impact in situations where there are few relevant studies, or where there may be vested interests in the published literature (Hartling et al 2017). The inclusion of unpublished trials will increase precision, generalizability and applicability of findings (Egger et al 2003). In the interest of feasibility, review authors should assess their research questions and topic area, and seek advice from content experts when selecting dissertation and theses databases to search. Review authors should consult their Cochrane Information Specialist, local library or university for information about dissertations and theses databases in their country or region.

1.1.6 Grey literature databases

As stated above, it is highly desirable, for authors of Cochrane reviews of interventions, to search relevant grey literature sources such as reports, dissertations, theses, databases and databases of conference abstracts (MECIR C28).

Grey literature was defined at GL3, the Third International Conference on Grey Literature on 13 November 1997 in Luxembourg as “that which is produced on all levels of government, academics, business and industry in print and electronic formats, but which is not controlled by commercial publishers” (Farace and Frantzen 1997). On 6 December 2004, at GL6, the Sixth Conference in New York City, a clarification was added: grey literature is “... not controlled by commercial publishers, i.e. where publishing is not the primary activity of the producing body …” (Farace and Frantzen 2005). In a 2017 audit of 203 systematic reviews published in high-impact general medical journals in 2013, 64% described an attempt to search for unpublished studies. The audit showed that reviews published in the Cochrane Database of Systematic Reviews were significantly more likely to include a search for grey literature than those published in standard journals (Ziai et al 2017). A Cochrane Methodology Review indicated that published trials showed an overall greater treatment effect than grey literature trials (Hopewell et al 2007a). Although failure to identify trials reported in conference proceedings and other grey literature might affect the results of a systematic review (Hopewell et al 2007a), a recent systematic review showed that this was only the case in a minority of reviews (Schmucker et al 2017). Since the impact of excluding unpublished data is unclear, review authors should consider the time and effort spent when planning the grey-literature portion of the search.

Grey literature’s diverse formats and audiences can present a significant challenge in a systematic search for evidence. Locating grey literature can often be challenging, requiring librarians to use several databases from various host providers or websites, some of which they may not be familiar with (Saleh et al 2014, Haddaway and Bayliss 2015). There are many characteristics of grey literature that make it difficult to search systematically. Further, there is no ‘gold standard’ for rigorous systematic grey literature search methods and few resources on how to conduct this type of search (Godin et al 2015, Paez 2017). One challenge of searching the grey literature is managing an abundance of material. Often, there are many sources to search, and some authors of very broad or cross-disciplinary topics may find it necessary to impose some limits on the extent of their grey literature searching by considering what is feasible within limited time and resources (Mahood et al 2014). For example, since nearly half of the citations found in reviews of new and emerging non-drug technologies are grey literature, searchers should consider focusing their efforts on search engines and aggregator sites to increase feasibility (Farrah and Mierzwinski-Urban 2019). Google Scholar can help locate a large volume of grey literature and specific, known studies; however, it should not be used as the only resource for systematic review searches (Haddaway et al 2015). The types of grey literature that are useful in specific reviews may depend on the research question and researchers may decide to tailor the search to the question (Levay et al 2015). For example, unpublished academic research may be important for countering possible publication bias and can be targeted via specific repositories for preprints, theses and funding registries. Alternatively, if the research question is related to implementation or if the researchers are interested in material to support their implications for practice section, then organizational reports, government documents and monitoring and evaluation reports might be important for ensuring the search is extensive and fit for purpose (Haddaway and Bayliss 2015).

Careful documentation throughout the search process will demonstrate that efforts have been made to be comprehensive and will help in making the grey literature searching as reproducible as possible (Stansfield et al 2016).

The following resources can help authors plan a manageable and thorough approach to searching the grey literature for their topic.

- The Canadian Agency for Drugs and Technologies in Health (CADTH) publishes a resource entitled ‘Grey Matters: a practical tool for searching health-related grey literature’ (https://www.cadth.ca/resources/finding-evidence/grey-matters) which lists a considerable number of grey literature sources together with annotations about their content as well as search hints and tips.

- GreySource (http://greynet.org/greysourceindex.html) provides links to self-described sources of grey literature. Only web-based resources that explicitly refer to the term grey literature (or its equivalent in any language) are listed. The links are categorized by subject, so that authors can quickly identify relevant sources to pursue.

- The Health Management Information Consortium (HMIC) Database (https://www.kingsfund.org.uk/consultancy-support/library-services) contains records from the Library and Information Services department of the UK Department of Health and the King’s Fund Information and Library Service. It includes all UK Department of Health publications including circulars and press releases. The King’s Fund is an independent health charity that works to develop and improve management of health and social care services. The database is considered to be a good source of grey literature on topics such as health and community care management, organizational development, inequalities in health, user involvement, and race and health.

- The US National Technical Information Service (NTIS; www.ntis.gov) provides access to the results of both US and non-US government-sponsored research and can provide the full text of the technical report for most of the results retrieved. NTIS is free of charge on the internet and goes back to 1964.

- OpenGrey (www.opengrey.eu) is a multidisciplinary European grey literature database, covering science, technology, biomedical science, economics, social science and humanities. Each record has an English title and / or English keywords. Some records include an English abstract (starting in 1997). The database includes technical or research reports, doctoral dissertations, conference presentations, official publications, and other types of grey literature. Information is also provided regarding how to access the documents included in the database.

- PsycEXTRA (http://www.apa.org/pubs/databases/psycextra/) is a companion database to PsycINFO in psychology, behavioural science and health. It includes references from newsletters, magazines, newspapers, technical and annual reports, government reports and consumer brochures. PsycEXTRA is different from PsycINFO (https://www.apa.org/pubs/databases/psycinfo/index) in its format, because it includes abstracts and citations plus full text for a major portion of the records. There is no coverage overlap between PsycEXTRA and PsycINFO.

Conference abstracts are a particularly important source of grey literature and are further covered in Section 1.3.3.

1.2 Ongoing studies and unpublished data sources: further considerations

This section should be read in conjunction with Chapter 4, Sections 4.3.2, 4.3.3, and 4.3.4.

1.2.1 Trials registers and trials results registers

It is mandatory for authors of Cochrane reviews of interventions to search trials registers and repositories of results, where relevant to the topic, through ClinicalTrials.gov, the WHO International Clinical Trials Registry Platform (ICTRP) portal and other sources as appropriate (MECIR C27) (see Chapter 4, Section 4.3.3). Although ClinicalTrials.gov is included as one of the registers within the WHO ICTRP portal, it is recommended that ClinicalTrials.gov and the ICTRP portal are each searched separately, within their own interfaces, because of additional features in ClinicalTrials.gov (Glanville et al 2014) (see below).

Several initiatives have led to the development of trials registers and to recommendations to search them. The International Committee of Medical Journal Editors (ICMJE) requires prospective registration of studies as a condition of their subsequent publication in ICMJE member journals, and there is a legal requirement that the results of certain studies be posted within a given timeframe. Several studies have shown, however, that adherence to these requirements is mixed (Gill 2012, Huser and Cimino 2013b, Huser and Cimino 2013a, Jones et al 2013, Anderson et al 2015, Dal-Re et al 2016, Goldacre et al 2018, Jorgensen et al 2018), that results posted on ClinicalTrials.gov are discordant with results published in journal articles (Gandhi et al 2011, Earley et al 2013, Hannink et al 2013, Becker et al 2014, Hartung et al 2014, De Oliveira et al 2015), or both (Jones and Platts-Mills 2012, Adam et al 2018).

ClinicalTrials.gov

In February 2000, the US National Library of Medicine (NLM) launched ClinicalTrials.gov (https://clinicaltrials.gov/ct2/home). ClinicalTrials.gov was created as a result of the Food and Drug Administration Modernization Act of 1997 (FDAMA). FDAMA required the US Department of Health and Human Services, through the US National Institutes of Health (NIH), to establish a registry of clinical trials information for both (US) federally and privately funded trials conducted under ‘investigational new drug’ applications to test the effectiveness of experimental drugs for “serious or life-threatening diseases or conditions”. The ClinicalTrials.gov registration requirements were expanded after the US Congress passed the FDA Amendments Act of 2007 (FDAAA). Section 801 of FDAAA (FDAAA 801) required more types of trials to be registered and additional trial registration information to be submitted. The law also required the submission of results for certain trials. This led to the expansion of ClinicalTrials.gov to include information on study participants and a summary of study outcomes, including adverse events. Results have been made available since September 2008. Further legislation has expanded the coverage of results in ClinicalTrials.gov, which now serves as a major international register including clinical trials conducted in over 200 countries.

Searches of ClinicalTrials.gov can be limited to studies with results by selecting ‘Studies With Results’ from the pull-down menu at the ‘Study Results’ option on the Advanced Search page (https://clinicaltrials.gov/ct2/search/advanced). Research has shown that the most reliable way of searching ClinicalTrials.gov is to conduct a highly sensitive ‘single concept’ search in its basic interface (Glanville et al 2014). The same study also suggested that the advanced interface seemed to improve precision without loss of sensitivity, so it might be preferred when large numbers of search results are anticipated.

Search help for ClinicalTrials.gov is available from the following links:

- How to Use Basic Search: https://clinicaltrials.gov/ct2/help/how-find/basic

- How to Use Advanced Search: https://clinicaltrials.gov/ct2/help/how-find/advanced

- How to Read a Study Record: https://clinicaltrials.gov/ct2/help/how-read-study

- How to Use Search Results: https://clinicaltrials.gov/ct2/help/how-use-search-results

The World Health Organization International Clinical Trials Registry Platform search portal (WHO ICTRP)

In May 2007, the World Health Organization (WHO) launched the International Clinical Trials Registry Platform (ICTRP) search portal (http://apps.who.int/trialsearch/) to search across a range of trials registers, similar to the initiative launched some years earlier by Current Controlled Trials with its ‘metaRegister’ (which has ceased publication). Currently (August 2019), the WHO portal searches across 17 registers (including ClinicalTrials.gov, but note the guidance above regarding searching ClinicalTrials.gov separately through its own interface). Research has shown that the most reliable way of searching the ICTRP is to conduct a highly sensitive ‘single concept’ search in the ICTRP basic interface (Glanville et al 2014). The same study suggested that use of the ICTRP advanced interface might be problematic because of reductions in sensitivity.

Search help for the ICTRP is available from the following link:

http://apps.who.int/trialsearch/tips.aspx
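Because ClinicalTrials.gov records also appear in the ICTRP portal, searching the two separately, as recommended above, will return overlapping records. The Python sketch below shows one possible way to merge exported result sets and remove duplicates by trial registration number. It assumes each export has already been reduced to a list of dictionaries with hypothetical keys `trial_id`, `title` and `source`; the real export formats of the two interfaces differ and would need mapping first, and matching on a single identifier will not catch a trial registered under different numbers in different registers.

```python
from typing import Dict, Iterable, List


def merge_register_results(*result_sets: Iterable[Dict[str, str]]) -> List[Dict[str, str]]:
    """Merge exported results from several trials registers.

    Records are deduplicated on a normalized trial identifier (the
    hypothetical 'trial_id' key). The first occurrence of each ID is kept,
    so pass the preferred source first.
    """
    seen = set()
    merged = []
    for results in result_sets:
        for record in results:
            # Normalize case and surrounding whitespace so that
            # 'nct00000002 ' and 'NCT00000002' count as the same trial.
            key = record["trial_id"].strip().upper()
            if key in seen:
                continue
            seen.add(key)
            merged.append(record)
    return merged


# Hypothetical example exports (identifiers are illustrative only).
ctgov = [
    {"trial_id": "NCT00000001", "title": "Trial A", "source": "ClinicalTrials.gov"},
    {"trial_id": "NCT00000002", "title": "Trial B", "source": "ClinicalTrials.gov"},
]
ictrp = [
    {"trial_id": "nct00000002", "title": "Trial B", "source": "ICTRP"},
    {"trial_id": "ISRCTN00000003", "title": "Trial C", "source": "ICTRP"},
]

combined = merge_register_results(ctgov, ictrp)
for record in combined:
    print(record["trial_id"], record["source"])
```

Passing the ClinicalTrials.gov export first means its copy of any duplicated record is the one retained; this ordering is a local choice for the sketch, not a Cochrane requirement.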

Other trials registers

HSRProj (Health Services Research Projects in Progress) (https://hsrproject.nlm.nih.gov/) provides information about ongoing health services research and public health projects. It contains descriptions of research in progress funded by US federal and private grants and contracts for use by policy makers, managers, clinicians and other decision makers. It provides access to information about health services research in progress before results are available in a published form.

Many countries and regions maintain trials results registers. There are also many condition-specific trials registers, especially in the field of cancer, which are too numerous to list. Some pharmaceutical companies make available information about their clinical trials through their own websites, either instead of or in addition to the information they make available through national or international websites.

In addition, Clinical Trial Results (www.clinicaltrialresults.org) is a website that hosts slide and video presentations from clinical trialists, especially in the field of cardiology but also other specialties, reporting the results of clinical trials.

Further listings of international, national, regional, subject-specific and industry trials registers, together with guidance on how to search them can be found on a website developed in 2009 by two of the co-authors of this chapter (JG and CL) entitled Finding clinical trials, research registers and research results (https://sites.google.com/a/york.ac.uk/yhectrialsregisters/).

1.2.2 Regulatory agency sources and clinical study reports

The EU Clinical Trials Register (EUCTR)

The EUCTR contains protocol and results information for interventional clinical trials on medicines conducted in the European Union (EU) and the European Economic Area (EEA) which started after 1 May 2004. It enables searching for information in the EudraCT database, which is used by national medicines regulators for data related to clinical trial protocols. Results data are extracted from data entered by the sponsors into EudraCT. The EUCTR has been a ‘primary registry’ in the ICTRP since September 2011, but in the absence of any evidence to the contrary, it is recommended that the EUCTR be searched within its own interface and not solely via the ICTRP (in line with the advice above regarding searching ClinicalTrials.gov). The register currently (August 2019) contains information about approximately 60,000 clinical trials. Searches can be limited to ‘Trials with results’ under the ‘Results status’ option, and up to 50 records can be downloaded at a time.

https://www.clinicaltrialsregister.eu/ctr-search/search
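Because the EUCTR allows up to 50 records to be downloaded at a time, a larger result set has to be retrieved in several downloads. The minimal Python sketch below simply works out the record ranges needed; the function name is hypothetical, and the sketch does not interact with the EUCTR website itself.

```python
import math
from typing import List, Tuple

MAX_PER_DOWNLOAD = 50  # EUCTR download limit stated in the text


def download_batches(total_hits: int, batch_size: int = MAX_PER_DOWNLOAD) -> List[Tuple[int, int]]:
    """Return inclusive, 1-based (first, last) record ranges covering all hits."""
    if total_hits < 0 or batch_size <= 0:
        raise ValueError("total_hits must be >= 0 and batch_size > 0")
    batches = []
    for start in range(1, total_hits + 1, batch_size):
        end = min(start + batch_size - 1, total_hits)
        batches.append((start, end))
    return batches


hits = 137  # illustrative result count
ranges = download_batches(hits)
# Number of downloads equals ceil(hits / 50).
print(math.ceil(hits / MAX_PER_DOWNLOAD), ranges)
```

Recording the ranges alongside the search date makes it easier to confirm later that every batch was downloaded.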

Drugs@FDA, OpenTrialsFDA Prototype and medical devices

Drugs@FDA (http://www.accessdata.fda.gov/scripts/cder/daf/) is hosted by the US Food and Drug Administration and provides information about most of the drugs approved in the US since 1939. For drugs approved more recently (from 1998), there is often a ‘Review’, which contains the scientific analyses that provided the basis for approval of the new drug. In 2012, new search options were introduced, enabling search strategies to be saved and re-run and results to be downloaded to a spreadsheet (Goldacre et al 2017).

The OpenTrialsFDA Prototype initiative makes data from FDA documents (Drug Approval Packages) more easily accessible and searchable, links the data to other clinical trial data and presents the data through a new user-friendly web interface (https://opentrials.net/opentrialsfda/).

The FDA also makes information about devices, including several medical device databases (including the Post-Approval Studies (PAS) Database and a database of Premarket Approvals (PMA)), available on its website at:

Clinical study reports

Clinical study reports (CSRs) are reports of clinical trials that provide detailed information on the methods and results of trials submitted in support of marketing authorization applications. Cochrane recently funded a project under the Methods Innovation Funding programme to draft interim guidance to help Cochrane review authors decide whether to include data from CSRs and other regulatory documents in a Cochrane Review (http://methods.cochrane.org/methods-innovation-fund-2) (Hodkinson et al 2018, Jefferson et al 2018).

A Clinical Study Reports Working Group has been established in Cochrane to take this work forward and to consider how CSRs might be used in future Cochrane Reviews. To date, only one Cochrane Review is based solely on CSRs: the 2014 update of the review of neuraminidase inhibitors for preventing and treating influenza in healthy adults and children (Jefferson et al 2014).

In late 2010, the European Medicines Agency (EMA) began releasing CSRs (on request) under its Policy 0043. In October 2016, it began to release CSRs under its Policy 0070, which applies only to documents received since 1 January 2015. CSRs are available for approximately 150 products (as at September 2019) (https://clinicaldata.ema.europa.eu/web/cdp/background).

To download the full CSR documents, it is necessary to register for use “for academic and other non-commercial research purposes” and to provide an email address and an address in the European Union, or to provide details of a third party, resident or domiciled in the European Union, who will be considered to be the user.

https://clinicaldata.ema.europa.eu/web/cdp/termsofuse

The FDA does not currently routinely provide access to CSRs, only to its own internal reviews, as noted above. In January 2018, however, it announced a voluntary pilot programme to disclose CSRs from up to nine recently approved drug applications, limited to the key ‘pivotal’ trials that underpin drug approval (Doshi 2018). A public consultation on this pilot programme (which included only one CSR) was undertaken in August 2019.

The Japanese Pharmaceuticals and Medical Devices Agency (PMDA) also provides access to its own internal reviews of approved drugs and medical devices but not the original CSRs. These can be found in the Reviews section of its website at:

https://www.pmda.go.jp/english/review-services/reviews/0001.html

https://www.pmda.go.jp/english/review-services/reviews/approved-information/drugs/0001.html

In April 2019 Health Canada announced that it was starting to make clinical information about drugs and devices publicly available on its website (https://clinical-information.canada.ca/search/ci-rc) (Lexchin et al 2019). As at August 2019, information was available for 10 drug records and three medical device records.

1.3 Journals and other non-bibliographic database sources

1.3.1 Handsearching

Handsearching involves a manual page-by-page examination of the entire contents of a journal issue or conference proceedings to identify all eligible reports of trials. (For discussion of ‘handsearching’ full-text journals available electronically, see Section 1.3.2.) In journals, reports of trials may appear in articles, abstracts, news columns, editorials, letters or other text. Handsearching healthcare journals and conference proceedings can be a useful adjunct to searching electronic databases for at least two reasons: 1) not all trial reports are included in electronic bibliographic databases, and 2) even when they are included, they may not contain relevant search terms in the titles or abstracts or be indexed with terms that allow them to be easily identified as trials (Dickersin et al 1994). It should be noted, however, that handsearching is not a requirement for all Cochrane Reviews, and review authors should seek advice from their Cochrane Information Specialist or their medical / healthcare librarian or information specialist on whether handsearching might be valuable for their review and, if so, what to search and how (Littlewood et al 2017). Each journal year or conference proceeding that is to be handsearched should be searched thoroughly and competently by a well-trained handsearcher, ideally for all reports of trials, irrespective of topic, so that once it has been handsearched it will not need to be searched again. A Cochrane Methodology Review found that a combination of handsearching and electronic searching is necessary for full identification of relevant reports published in journals, even for those that are indexed in MEDLINE (Hopewell et al 2007b).
This was especially the case for articles published before 1991 when there was no indexing term for randomized trials in MEDLINE and for those articles that are in parts of journals (such as supplements and conference abstracts) which are not routinely indexed in databases such as MEDLINE. Richards’ review (Richards 2008) found that handsearching was valuable for finding trials reported in abstracts or letters, or in languages other than English. We note that Embase is now a good source of conference abstracts.

To facilitate the identification of all published trials, Cochrane has organized extensive handsearching efforts. Over 3000 journals have been, or are being, searched within Cochrane. The list of journals that have already been handsearched, with the dates of the search and whether the search has been completed, is available via the Handsearched Journals tab in the Cochrane Register of Studies Online (crso.cochrane.org; Cochrane Account login required). Cochrane Information Specialists can edit records of journals that are being handsearched and can add new handsearch records to the Register (Littlewood et al 2017). Since many conference proceedings are now included in Embase, the information specialist can also check coverage of specific conferences of interest against the Embase list of conferences (https://www.elsevier.com/solutions/embase-biomedical-research/embase-coverage-and-content). Handsearching should still be considered, however, since searches of Embase will not necessarily find all the trial records in a conference issue (Stovold and Hansen 2011).

Cochrane groups and authors can prioritize handsearching based on where they expect to identify the most trial reports. This prioritization can be informed by searching CENTRAL, MEDLINE and Embase in a topic area and identifying which journals appear to be associated with the most retrieved citations. Preliminary evidence suggests that most of the journals with a high yield of trial reports are indexed in MEDLINE (Dickersin et al 2002), but this may reflect the fact that Cochrane contributors concentrated their early efforts on searching these journals. Therefore, journals not indexed in MEDLINE or Embase should also be considered for handsearching. Research into handsearching journals in a range of languages suggests that handsearching journals published in languages other than English is still helpful for identifying trials which have not been retrieved by database searches (Blumle and Antes 2005, Fedorowicz et al 2005, Al-Hajeri et al 2006, Nasser and Al Hajeri 2006, Chibuzor and Meremikwu 2009). The value of handsearching may vary from topic to topic. In physical therapy and respiratory disease, recent studies have found that handsearching yielded additional studies (Stovold and Hansen 2011, Craane et al 2012). Identifying studies of handsearching in specific disease areas may help to inform decisions about handsearching.
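The prioritization step described above, counting which journals account for most of the retrieved citations, can be sketched in a few lines of Python. This assumes the records from CENTRAL, MEDLINE and Embase have already been exported, deduplicated and reduced to a list of journal titles; the sample titles are hypothetical. In practice, journal names usually need fuller normalization than shown here, because databases abbreviate titles differently.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_journals(journal_titles: Iterable[str], top_n: int = 10) -> List[Tuple[str, int]]:
    """Rank journals by the number of retrieved citations they account for."""
    # Light normalization only: trim whitespace and lower-case titles so that
    # trivial formatting differences between exports do not split the counts.
    counts = Counter(t.strip().lower() for t in journal_titles if t.strip())
    # most_common() sorts by count; ties keep first-encountered order.
    return counts.most_common(top_n)


# Hypothetical journal fields from a combined, deduplicated export.
retrieved = [
    "Journal of Physiotherapy", "Thorax", "thorax ", "Respiratory Medicine",
    "Thorax", "Journal of Physiotherapy", "Chest",
]

for journal, n in rank_journals(retrieved, top_n=3):
    print(f"{n:3d}  {journal}")
```

A ranking like this only shows where retrieved records cluster; as the text notes, journals not indexed in MEDLINE or Embase will not appear in it at all and need to be considered separately.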

The Cochrane Training Manual for Handsearchers is available on the Cochrane Information Retrieval Methods Group Website: http://methods.cochrane.org/irmg/resources.

1.3.2 Full text journals available electronically

The full text of many journals is available electronically on the internet. Access may be partially or wholly on a subscription basis or free of charge. In addition to providing a convenient method for retrieving the full article of already identified records, full-text journals can also be searched electronically, depending on the search interface, by entering relevant keywords in a similar way to searching for records in a bibliographic database. Electronic journals can also be ‘handsearched’ in a similar manner to that advocated for journals in print form, in that each screen or ‘page’ can be checked for possibly relevant studies in the same way as handsearching a print journal (see Section 1.3.1). When reporting handsearching, it is important to specify whether the full text of a journal has been searched electronically or using the print version. Some journals omit sections of the print version, for example letters, from the electronic version and some include supplementary information such as extra articles in the electronic format only.

Most academic institutions subscribe to a wide range of electronic journals and these are therefore available free of charge at the point of use to members of those institutions. Review authors should seek advice about electronic journal access from the library service at their institution. Some professional organizations provide access to a range of journals as part of their membership package. In some countries similar arrangements exist for health service employees through national licences.

Several international initiatives provide free or low-cost online access to full-text journals (and databases). The Health InterNetwork Access to Research Initiative (HINARI) provides access to approximately 15,000 journals (and up to 60,000 e-books), in 30 different languages, to health institutions in more than 120 low- and middle-income countries, areas and territories (World Health Organization 2019). Other initiatives include the International Network for the Availability of Scientific Publications (INASP) and Electronic Information for Libraries (EIFL).

A local electronic or print copy of any possibly relevant article found electronically in a subscription journal should be taken and filed (in accordance with copyright legislation), as the subscription to that journal may cease. The same applies to electronic journals available free of charge, as the circumstances around availability of specific journals might change. We have not been able to identify any research evidence regarding searching full-text journals available electronically. Authors are not routinely expected to search full-text journals available electronically for their reviews, but they should discuss with their Cochrane Information Specialist whether, in their particular case, this might be beneficial.

1.3.3 Conference abstracts and proceedings

It is highly desirable for authors of all Cochrane reviews of interventions to search relevant databases of conference abstracts (MECIR C28). Although conference proceedings are not indexed in MEDLINE, about 2.5 million conference abstracts from about 7,000 conferences (as at August 2019) are now indexed in Embase.

Elsevier provides a list of the conferences it indexes in Embase, as mentioned above (https://www.elsevier.com/solutions/embase-biomedical-research/embase-coverage-and-content). As a result of Cochrane’s Embase project (see Section 2.1.2), conference abstracts that are indexed in Embase and are reports of RCTs are now being included in CENTRAL. Other resources, such as the Web of Science Conference Proceedings Citation Indexes, also include conference abstracts. A Cochrane Methodology Review found that trials with positive results tended to be published in approximately 4 to 5 years, whereas trials with null or negative results were published after about 6 to 8 years (Hopewell et al 2007c). Not all conference presentations are published or indexed (Slobogean et al 2009). Over one-half of trials reported in conference abstracts never reach full publication (Diezel et al 1999, Scherer et al 2018), and those that are eventually published in full have been shown to have results that are systematically different from those that are never published in full (Scherer et al 2018). Trials with positive findings are also more likely to be published than those without positive findings (Salami and Alkayed 2013). In addition, conference abstracts / proceedings are a good source for tracking discrepancies between the original abstract and the full report of a study (Chokkalingam et al 1998, Pitkin et al 1999). It is, therefore, important to try to identify possibly relevant studies reported in conference abstracts through specialist database sources and by searching those abstracts that are made available on the internet, on CD-ROM / DVD or in print form. Many conference proceedings are published as journal supplements or as proceedings on the website of the conference or the affiliated organization.

1.3.4 Other reviews, guidelines and reference lists as sources of studies

It is highly desirable for authors of Cochrane reviews of interventions to search within previous reviews on the same topic (MECIR C29), and it is mandatory for them to check reference lists of included studies and any relevant systematic reviews identified (MECIR C30). Reviews can provide relevant studies and references, and may also provide information about the search strategy used, which may inform the current review (Hunt and McKibbon 1997, Glanville and Lefebvre 2000). Copies of previously published reviews on, or relevant to, the topic of interest should be obtained and checked for references to the included (and excluded) studies. Various sources for identifying previously published reviews are described below.

Until recently, in addition to the Cochrane Database of Systematic Reviews (CDSR), the Cochrane Library included the Database of Abstracts of Reviews of Effects (DARE) and the Health Technology Assessment Database (HTA Database), both produced by the Centre for Reviews and Dissemination (CRD) at the University of York in the UK. Both databases provide information on published reviews of the effects of health care (Petticrew et al 1999). Searches of MEDLINE, Embase, CINAHL, PsycINFO and PubMed to identify candidate records for DARE continued until the end of 2014, and bibliographic records were published on DARE until 31 March 2015. CRD will maintain secure archive versions of DARE until at least 2021. CRD continued to maintain and add records to the HTA Database until 31 March 2018; the database is being taken over by the International Network of Agencies for Health Technology Assessment (INAHTA) (https://www.crd.york.ac.uk/CRDWeb/). Since 1 April 2015, the NIHR Dissemination Centre at the University of Southampton has made summaries of new research available; details can be found at http://www.disseminationcentre.nihr.ac.uk/.

KSR Evidence, a subscription database, aims to include all systematic reviews and meta-analyses published since 2015 (https://ksrevidence.com/). KSR Evidence was developed by Kleijnen Systematic Reviews Ltd (KSR) (www.systematic-reviews.com). KSR produces and disseminates systematic reviews, cost-effectiveness analyses and health technology assessments of research evidence in health care. The database also includes an advanced search option, suitable for information specialists.

CRD provides an international register of prospectively registered systematic reviews in health and social care called PROSPERO, which (as at August 2019) contained over 50,000 records (www.crd.york.ac.uk/prospero/) (Page et al 2018). Key features from the review protocol are recorded and maintained as a permanent record. PROSPERO aims to provide a comprehensive listing of systematic reviews registered at inception to help avoid duplication and to reduce the opportunity for reporting bias by enabling comparison of the completed review with what was planned in the protocol. PROSPERO, therefore, provides access to ongoing reviews as well as completed and / or published reviews.

Epistemonikos is a web-based bibliographic service which provides access to many thousands of systematic reviews, broad syntheses of reviews and structured summaries, and their included primary studies (http://www.epistemonikos.org/en). The aim of Epistemonikos is to provide rapid access to systematic reviews in health. Epistemonikos uses the eligibility criteria specified by the review authors to include primary studies in the database. Records that are classified as systematic reviews within Epistemonikos are now available through the Cochrane Library but are only included in search results for queries entered in the Basic Search box, available from the Cochrane Library header. They are not retrieved when using Advanced Search.

The Systematic Review Data Repository (SRDR) is an open and searchable archive of systematic reviews and their data (http://srdr.ahrq.gov/).

Health Systems Evidence is a repository of evidence syntheses about governance, financial and delivery arrangements within health systems, and about implementation strategies that can support change in health systems. The types of syntheses include evidence briefs for policy, overviews of systematic reviews, systematic reviews, protocols, and registered titles. The audience is policy makers / researchers (https://www.healthsystemsevidence.org).

Specific evidence-based search services such as Turning Research into Practice (TRIP) (https://www.tripdatabase.com/) can also be used to identify reviews and guidelines (Brassey 2007). For the range of systematic review sources searched by TRIP see www.tripdatabase.com/about. Access is offered at two levels: free of charge and subscription.

SUMSearch 2 (http://sumsearch.org/) simultaneously searches for original studies, systematic reviews, and practice guidelines from multiple sources.

MEDLINE, Embase and other bibliographic databases, such as CINAHL (Wright et al 2015), can also be used to identify review articles and guidelines. For the 2019 release of the Medical Subject Headings (MeSH), Systematic Review was introduced as a Publication Type term. NLM announced: “We added the publication type ‘Systematic Review’ retrospectively to appropriate existing MEDLINE citations. With this re-indexing, you can retrieve all MEDLINE citations for systematic reviews and identify systematic reviews with high precision.”

https://www.nlm.nih.gov/pubs/techbull/ma19/brief/ma19_systematic_review.html

Embase has a thesaurus (Emtree) term ‘Systematic Review’, which was introduced in 2003. For records prior to 2003, the Emtree terms ‘review’ or ‘evidence-based medicine’ could be used.

Several filters to identify reviews and overviews of systematic reviews in MEDLINE (Boynton et al 1998, Glanville et al 2001, Montori et al 2005, Wilczynski and Haynes 2009) and Embase have been developed and tested over the years (Wilczynski et al 2007, Lunny et al 2015). Until late 2018, the PubMed Systematic Reviews filter under the Clinical Queries link was very broad in its scope and retrieved many references that were not systematic reviews. The strategy was defined by NLM as follows: “This strategy is intended to retrieve citations identified as systematic reviews, meta-analyses, reviews of clinical trials, evidence-based medicine, consensus development conferences, guidelines, and citations to articles from journals specializing in review studies of value to clinicians. This filter can be used in a search as systematic [sb].” An archived version of this search filter is available from the InterTASC Information Specialists’ Sub-Group’s Search Filter Resource at:

In late 2018, NLM replaced this search filter with a much more precise filter, which it defines as follows: “This strategy is intended to retrieve citations to systematic reviews in PubMed and encompasses: citations assigned the ‘Systematic Review’ publication type during MEDLINE indexing; citations that have not yet completed MEDLINE indexing; and non-MEDLINE citations. This filter can be used in a search as systematic [sb].”

Example: exercise hypertension AND systematic [sb]

This filter is also available on the Filters sidebar under ‘Article types’ and on the Clinical Queries screen. The full search filter is available at:

https://www.nlm.nih.gov/bsd/pubmed_subsets/sysreviews_strategy.html
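The example query above can also be run programmatically through NCBI’s E-utilities. The minimal Python sketch below only builds the request URL for the ESearch endpoint (it makes no network call); the `retmax` value is an arbitrary illustrative choice, and anyone running searches this way should check NCBI’s current E-utilities usage guidance (for example, on API keys and request rates) first.

```python
from urllib.parse import urlencode

ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def pubmed_esearch_url(term: str, retmax: int = 100) -> str:
    """Build an ESearch request URL for a PubMed query string."""
    # urlencode escapes spaces as '+' and square brackets as %5B / %5D,
    # so field tags such as [sb] survive transmission intact.
    params = {"db": "pubmed", "term": term, "retmax": retmax}
    return f"{ESEARCH_URL}?{urlencode(params)}"


url = pubmed_esearch_url("exercise hypertension AND systematic[sb]")
print(url)
```

Fetching that URL returns an XML list of PMIDs, which can then be retrieved in full with EFetch.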

The sensitive Clinical Queries Filters for therapy, diagnosis, prognosis, and aetiology perform well in retrieving not only primary studies but also systematic reviews in PubMed. In a test of the Clinical Queries Filters by the McMaster Health Information Research Unit (HIRU), Wilczynski and colleagues reported that performance could be improved by combining the Clinical Queries Filters with the HIRU systematic review filter using the Boolean operator ‘OR’ (Wilczynski et al 2011). As well as filters for study design, some filters are available for special populations, and these might be combined with systematic review filters (Boluyt et al 2008).
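Combining a Clinical Queries filter with a systematic review filter using ‘OR’ amounts to a small query-building step. The Python sketch below wraps each component in parentheses so that PubMed applies the Boolean operators in the intended order. It uses PubMed’s `Therapy/Broad[filter]` tag and the `systematic[sb]` subset purely as illustrative stand-ins: the HIRU systematic review filter evaluated by Wilczynski and colleagues is a different, longer strategy, and the topic terms are hypothetical.

```python
from typing import Sequence


def combine_with_filters(topic: str, filters: Sequence[str]) -> str:
    """Return '(topic) AND ((filter1) OR (filter2) ...)' as a PubMed query string."""
    if not filters:
        return f"({topic})"
    # Parenthesize each filter so any internal Boolean logic stays intact.
    either_filter = " OR ".join(f"({flt})" for flt in filters)
    return f"({topic}) AND ({either_filter})"


query = combine_with_filters(
    "exercise AND hypertension",
    ["Therapy/Broad[filter]", "systematic[sb]"],
)
print(query)
```

Parenthesizing every component is deliberately defensive: a full published filter pasted in as one component may itself contain OR and AND operators.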

Research has been conducted to help researchers choose the filter appropriate to their needs (Lee et al 2012, Rathbone et al 2016). Filters and current reviews of filter performance can be found on the InterTASC Information Specialists’ Subgroup Search Filter Resource website (https://sites.google.com/a/york.ac.uk/issg-search-filters-resource/filters-to-identify-systematic-reviews) (Glanville et al 2019a). For further information on search filters see Section 3.6 and subsections.

National and regional drug approval and reimbursement agencies may also be useful sources of reviews:

- The Agency for Healthcare Research and Quality (AHRQ) publishes systematic reviews and meta-analyses. Evidence reports, comparative effectiveness reviews, technical briefs, Technology Assessment Program reports, and U.S. Preventive Services Task Force evidence syntheses are available under the Evidence-based Practice Centers (EPC) Program of the Agency for Healthcare Research and Quality. Access to the evidence reports is provided at: http://www.ahrq.gov/research/findings/evidence-based-reports/search.html.

- The Canadian Agency for Drugs and Technologies in Health (CADTH) (www.cadth.ca) is an independent, not-for-profit organization responsible for providing healthcare decision-makers with evidence reports to help make informed decisions about the optimal use of drugs, diagnostic tests, and medical, dental, and surgical devices and procedures. CADTH’s Common Drug Review reports, Pan Canadian Oncology Drug Review reports, Health Technology Assessments, Technology Reviews and Therapeutic Reviews are published in full text on their website and include the full search strategy for the clinical evidence used in that review.

- The National Institute for Health and Care Excellence (NICE) (www.nice.org.uk) publishes guidance that includes recommendations on the use of new and existing medicines and other treatments within the National Health Service (NHS) in England and Wales. These reviews can be about medicines, medical devices, diagnostic tests, surgical procedures, or health promotion activities. Each guidance and appraisal document is based on a review of the evidence and reports the searches used.

Clinical guidelines, based on reviews of evidence, may also provide useful information about the search strategies used in their development: see the Appendix for examples of sources of clinical guidelines. Guidelines can also be identified by searching MEDLINE, in which guidelines should be indexed under the Publication Type term ‘Practice Guideline’, introduced in 1991. Embase has a thesaurus term ‘Practice Guideline’, introduced in 1994.

The ECRI Guidelines Trust (https://guidelines.ecri.org/) provides access to a free web-based repository of objective, evidence-based clinical practice guideline content. It includes evidence-based guidance developed by nationally and internationally recognized medical organizations and medical specialty societies. Guidelines are summarized and appraised against the US Institute of Medicine (IOM) Standards for Trustworthiness. The Guidelines Trust provides the following guideline-related content:

- Guideline Briefs: summaries of the key elements of each clinical practice guideline.

- TRUST (Transparency and Rigor Using Standards of Trustworthiness) Scorecards: ratings of how well guidelines fulfil the IOM Standards for Trustworthiness.

The Agency for Healthcare Research and Quality (AHRQ)’s National Guideline Clearinghouse was a public resource for summaries of evidence-based clinical practice guidelines. It ceased production in July 2018, and new guidelines were accepted for inclusion only until March 2018. The resource offered systematic comparisons of selected guidelines that addressed similar topic areas. For further information as to whether this resource will be reintroduced see: https://www.ahrq.gov/gam/updates/index.html.

Evidence summaries, such as online / electronic textbooks, point-of-care tools and clinical decision support resources, are a type of synthesized medical evidence. Examples of these tools include BMJ Clinical Evidence, ClinicalKey, DynaMed Plus and UpToDate, in addition to Cochrane’s own point-of-care tool, Cochrane Clinical Answers. Although they are designed for use in clinical practice, they present evidence on the diagnosis and treatment of specific conditions and are regularly updated, with links to, and reference lists of, reports of relevant studies; these can help in identifying studies, reviews and overviews. Most evidence summaries for use in clinical practice are available via subscription to commercial vendors.

As noted above, it is mandatory for authors of Cochrane Reviews of interventions to check the reference lists of included studies and of any relevant systematic reviews identified (MECIR C30). Checking reference lists within eligible studies supplements other searching approaches and may reveal new studies, or confirm that the topic has been thoroughly searched (Greenhalgh and Peacock 2005, Horsley et al 2011). Examples of situations where checking reference lists might be particularly beneficial are:

- when the review is of a new technology;

- when there have been innovations to an existing technique or surgical approach;

- where the terminology for a condition or intervention has evolved over time; and

- where the intervention is one which crosses subject disciplines, for example, between health and other fields such as education, psychology or social work. Researchers may use different terminology to describe an intervention depending on their field (O'Mara-Eves et al 2014).

It is not possible to give general guidance on which of the above sources (other reviews, guidelines and reference lists) should be searched for studies in every review; this will vary from review to review. Review authors should discuss this with their Cochrane Information Specialist or their medical / healthcare librarian or information specialist.

1.3.5 General web searching (including search engines / Google Scholar etc.)

Searching the World Wide Web (hereafter, web) involves using resources which are not specifically designed to host and facilitate the identification of studies. This includes general search engines such as Google Search and the websites of organizations that are relevant to the review topic, such as charities, research funders, manufacturers and medical societies. These resources often have basic search interfaces and host a wide range of content, which poses challenges when conducting systematic searching (Stansfield et al 2016). Despite these challenges, web searching has the potential to identify studies that are eligible for inclusion in a review, including ‘unique’ studies that are not identified by other search methods (Eysenbach et al 2001, Ogilvie et al 2005, Stansfield et al 2014, Godin et al 2015, Bramer et al 2017a). It is good practice to carry out web searching for review topics where studies are published in journals that are not indexed in bibliographic databases or where grey literature is an important source of data (Ogilvie et al 2005, Stansfield et al 2014, Godin et al 2015). Grey literature is literature “which is produced on all levels of government, academics, business and industry in print and electronic formats, but which is not controlled by commercial publishers” (see Section 1.1.6) (Farace and Frantzen 1997, Farace and Frantzen 2005).

It is good practice to base the search terms used for web searching on the search terms used for searching bibliographic databases (Eysenbach et al 2001). A simplified approach, however, might be required because of the basic search interfaces of web resources. For example, web resources are unlikely to support multi-line search strategy development or nested use of Boolean operators, and single-line searches are often limited to a maximum number of alphanumeric characters. As such, it might be necessary to rewrite a search using fewer search terms or to conduct several searches of the same resource using different combinations of search terms (Eysenbach et al 2001, Stansfield et al 2016). In addition to using search terms, web searching involves following links to webpages and websites. This is less structured than searching using pre-specified search terms, and the searcher will need to use their discretion to decide when to start and stop searching (Stansfield et al 2016). Wherever possible, a similar approach to searching should be used for different web resources to ensure consistency, and searches should be documented in full and reported in the review (see Chapter 4, Section 4.5).
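The approach of running several simpler searches with different combinations of terms can be sketched as follows. This is a minimal Python sketch, not a recommended tool: it assumes the web resource combines space-separated terms with AND by default and supports quotation marks for phrase searching, and the function names, character limit and example terms are hypothetical.

```python
from itertools import product


def as_phrase(term: str) -> str:
    """Quote multi-word terms so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def combination_queries(concepts: list[list[str]], max_chars: int) -> list[str]:
    """Build one single-line query per combination of synonyms.

    Each concept is a list of synonyms taken from the bibliographic database
    strategy. Instead of one nested query such as (A1 OR A2) AND (B1 OR B2),
    which many web resources cannot run, every combination A_i B_j is written
    as its own query and relies on the resource's default AND.
    """
    queries = []
    for combo in product(*concepts):
        query = " ".join(as_phrase(term) for term in combo)
        if len(query) > max_chars:
            # Hypothetical character limit: flag the query rather than
            # silently truncating it, so terms can be shortened deliberately.
            raise ValueError(f"Query exceeds {max_chars} characters: {query}")
        queries.append(query)
    return queries


# Hypothetical example terms for two concepts.
hand_hygiene = ["hand hygiene", "handwashing"]
nurses = ["nurse", "nursing"]
for q in combination_queries([hand_hygiene, nurses], max_chars=100):
    print(q)
```

The number of queries is the product of the number of synonyms per concept (here 2 × 2 = 4), so in practice the searcher may need to prioritize terms, and each query actually run should be documented as part of the search record.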

Web resources are unlikely to have a function for exporting results to reference management software, in which case the searcher may decide to screen the results ‘on screen’ while searching. Alternatively, screenshots can be taken and screened at a later time (Stansfield et al 2016). This process can be facilitated by software such as Evernote or OneNote. Because website content can be deleted or edited by the website editor at any time, a permanent record of any relevant studies should be retained.
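A permanent record can be as simple as a dated log of each relevant item found. The following is a minimal Python sketch using only the standard library; the CSV layout, the column names and the screenshot file name are hypothetical choices for illustration, not a Cochrane requirement.

```python
import csv
from datetime import date
from pathlib import Path
from typing import Optional

# Hypothetical column layout for a log of relevant web finds.
FIELDS = ["url", "title", "date_accessed", "screenshot_file", "notes"]


def record_web_find(
    log_path: Path,
    url: str,
    title: str,
    screenshot_file: str = "",
    notes: str = "",
    accessed: Optional[date] = None,
) -> None:
    """Append one relevant web item to a CSV log, writing a header for a new file."""
    accessed = accessed or date.today()
    is_new_file = not log_path.exists()
    with log_path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new_file:
            writer.writeheader()
        writer.writerow(
            {
                "url": url,
                "title": title,
                # ISO date (e.g. 2020-09-01) records when the content was seen.
                "date_accessed": accessed.isoformat(),
                "screenshot_file": screenshot_file,
                "notes": notes,
            }
        )
```

Recording the access date alongside a saved copy or screenshot matters because, as noted above, the page itself may later be edited or deleted.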

Web searching should use a combination of search engines and websites to ensure a wide range of sources are identified and searched in depth.

Search engines

Because of the scale and diversity of content on the web, searching using a search engine is likely to retrieve an unmanageable number of results (Mahood et al 2014). Results are usually ranked according to relevance as determined by a search engine’s algorithm, so it might be useful to limit screening to a pre-specified number of results; for example, limits ranging from 100 to 500 results have been reported in recent Cochrane Reviews (Briscoe 2018). Alternatively, an ad hoc decision to stop screening can be made when the search results become less relevant (Stansfield et al 2016). It is good practice to take a more comprehensive approach when screening Google Scholar results, which are limited to 1000, to ensure that all relevant studies, including grey literature, are identified (Haddaway et al 2015). Some search engines allow the user to limit searches to a specified domain name or file type, or to web pages where the search terms appear in the title. These options might improve the precision of a search, though they might also reduce its sensitivity. The number of results reported by a search engine is usually an estimate which varies over time, and the actual number of results might be much lower than reported (Bramer 2016). Search engines often combine search terms using the ‘AND’ Boolean operator by default. Some search engines support additional search operators and features such as ‘OR’, ‘NOT’, wildcards and phrase searching using quotation marks.
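The two ways of stopping described above, a pre-specified cap on the number of ranked results screened and an earlier stop once results become less relevant, can be sketched in Python as below. This is an illustrative sketch only: `is_relevant` stands in for the reviewer's own screening decision, and the "consecutive irrelevant results" rule is a hypothetical way of making the ad hoc stopping decision explicit and reportable; it is not a rule reported in the studies cited above.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional


@dataclass
class ScreeningLog:
    """Record of one search-engine screening session, for reporting."""
    screened: int = 0
    included: list[str] = field(default_factory=list)
    stop_reason: str = "results exhausted"


def screen_ranked_results(
    results: Iterable[str],
    is_relevant: Callable[[str], bool],
    cap: int,
    max_irrelevant_run: Optional[int] = None,
) -> ScreeningLog:
    """Screen results in ranked order until the cap or the stopping rule is met."""
    log = ScreeningLog()
    irrelevant_run = 0
    for item in results:
        if log.screened >= cap:
            log.stop_reason = f"pre-specified cap of {cap} reached"
            break
        log.screened += 1
        if is_relevant(item):  # stands in for the reviewer's judgement
            log.included.append(item)
            irrelevant_run = 0
        else:
            irrelevant_run += 1
            # Hypothetical ad hoc rule: stop after a run of irrelevant results.
            if max_irrelevant_run is not None and irrelevant_run >= max_irrelevant_run:
                log.stop_reason = f"{max_irrelevant_run} consecutive irrelevant results"
                break
    return log
```

Whichever rule is used, the number of results screened and the reason for stopping can then be reported alongside the search itself.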

There are many freely available search engines, each of which offers a different approach to searching the web. Because each search engine uses a different algorithm to retrieve and rank its results, the results will differ depending on the search engine that is used (Dogpile.com 2007). Some search engines use internet protocol (IP) addresses to tailor the search results to a user’s search history, so the search results might differ between users. For these reasons, it might be worth experimenting with or combining different search engines to retrieve a wider selection of results. There are freely available meta-search engines which search a combination of search engines, though they are often limited with regard to which search engines can be combined.

A selection of freely available search engines and meta-search engines is shown in Box 1.a. These are examples of different types of search engine rather than a list of recommended search engines. No specific search engines are recommended for a Cochrane Review.

Box 1.a Search engines

- Dogpile (http://www.dogpile.com/): Dogpile is a meta-search engine which, in a study from 2007, is reported to search Google Search, Yahoo!, Ask and Bing (Dogpile.com 2007). A more up-to-date list of the search engines used by Dogpile has not been identified.

- DuckDuckGo (https://duckduckgo.com/): DuckDuckGo protects the privacy of its users by not recording their IP addresses and search histories. A potential advantage for systematic review authors is that DuckDuckGo does not use search histories to personalize its search results, which might make it better at ranking less frequently visited but useful pages higher in the results.

- Google Scholar (https://scholar.google.com/): Google Scholar is a specialized version of Google Search which limits results to scholarly literature, including published studies and grey literature. It cannot be used instead of searching bibliographic databases because of its basic search interface and a block on viewing more than 1000 records per search (Boeker et al 2013a, Bramer et al 2016a). It can, however, be a useful resource when used alongside bibliographic databases for identifying studies and grey literature not indexed in bibliographic databases or not retrieved by the bibliographic database search strategies (Haddaway et al 2015, Bramer et al 2017a). The option to search the full text of studies can contribute to the identification of unique studies when using similar or the same search terms as used in bibliographic databases (Bramer et al 2017a). References can be exported to reference management software, though only 20 references can be exported at a time (Bramer et al 2013).

- Google Search (https://www.google.com/): Google Search is the most widely used search engine worldwide. An advantage of its popularity is that there is an abundance of online material on how to make the most of its advanced search features. The Verbatim feature in the Google Search Tools menu can be used to ensure search results contain the precise search terms used (e.g. it will not retrieve “nursing” when searching for “nurse”) and to switch off the personalization of search results based on websites which the user has previously visited. Personalization can also be deactivated via the settings menu.

- Microsoft Academic (https://academic.microsoft.com/): Microsoft Academic is a scholarly search engine which, like Google Scholar, indexes scholarly literature. It was relaunched in 2016 after a four-year hiatus. Comparative studies of Google Scholar and Microsoft Academic show that Google Scholar indexes more content than Microsoft Academic (Gusenbauer 2019). Microsoft Academic, however, has more structured and richer metadata than Google Scholar, which is reported to facilitate better search functionality and handling of results (Hug et al 2017).

Not all content on websites is indexed by search engines, so it is important to consider accessing and searching any potentially useful websites which are identified in the results (Devine and Egger-Sider 2013).

Websites

The selection of websites to search will be determined by the review topic. It is good practice to investigate whether the websites of relevant pharmaceutical companies and medical device manufacturers host trials registers which should be searched for studies. The websites of medicines regulatory bodies such as the US Food and Drug Administration (FDA) and the European Medicines Agency (EMA) should be searched for regulatory documentation (see Section 1.2 and subsections). It might also be useful to search the websites of professional societies, national and regional health departments, and health-related non-governmental organizations and charities for grey literature and for studies not indexed in bibliographic databases (Ogilvie et al 2005, Godin et al 2015).

Searching websites will usually yield a lower number of results than search engines, so it should be possible to screen all the results rather than a pre-specified number.

1.4 Summary points

- Cochrane Review authors should seek advice from their Cochrane Information Specialist on sources to search.

- Authors of non-Cochrane reviews should seek advice from their medical / healthcare librarian or information specialist who has experience of conducting searches for studies for systematic reviews.

- The key database sources which should be searched are the Cochrane Review Group’s Specialized Register (internally, e.g. via the Cochrane Register of Studies, or externally via CENTRAL), CENTRAL, MEDLINE and Embase (if access to Embase is available to either the review authors or the CRG).

- Appropriate national, regional and subject specific bibliographic databases should be searched according to the topic of the review.

- Relevant grey literature sources such as those containing reports, dissertations/theses and conference abstracts should be searched.

- Searches should be conducted to locate previous reviews on the same topic, to identify additional studies included in (and excluded from) those reviews.