James Thomas, Mark Petticrew, Jane Noyes, Jacqueline Chandler, Eva Rehfuess, Peter Tugwell, Vivian A Welch

Key Points:

- We refer to ‘intervention complexity’, rather than ‘complex intervention’, because no intervention is simple, and many review authors will need to consider some aspects of complexity.

- There are three ways of understanding intervention complexity: (i) in terms of the number of components in the intervention; (ii) in terms of interactions between intervention components or interactions between the intervention and its context, or both; and (iii) in terms of the wider system within which the intervention is introduced.

- Of most relevance to Cochrane Review authors are (i) and (ii), and the chapter focuses mainly on these understandings of intervention complexity.

Cite this chapter as: Thomas J, Petticrew M, Noyes J, Chandler J, Rehfuess E, Tugwell P, Welch VA. Chapter 17: Intervention complexity. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.3 (updated February 2022). Cochrane, 2022. Available from www.training.cochrane.org/handbook.

17.1 Introduction

This chapter introduces how to conceptualize and consider intervention complexity within systematic reviews. Advice available on this subject can appear contradictory and there is a risk that accounting for intervention complexity can make the review itself overly complex and less comprehensible to users. The key issue is how to identify an approach that assists in a specific systematic review. The chapter aims to signpost review authors to advice that helps them make decisions on when and in which circumstances to apply that advice. It does not aim to cover all aspects of complexity but advises review authors on how to frame review questions to address issues of intervention complexity and directs them to other sources for further reference. Other parts of this Handbook have been expanded to support considerations of intervention complexity, and this chapter provides cross-references where appropriate. Most of the methods discussed in this chapter have been thoroughly tested and published elsewhere. Some are still relatively new and under development. These new and emerging methods are flagged as such when they are discussed.

17.1.1 Conceptualizing intervention complexity

The terms ‘simple’ and ‘complex’ interventions are common in many texts addressing intervention complexity. We will refer to intervention complexity specifically because ‘simplicity’ and ‘complexity’ are not physical properties that separate interventions into simple and complex binary categories. Drugs – often characterized as simple – can equally be conceptualized as ‘complex interventions’ if we analyse them in their wider context (e.g. as part of the patient–clinician relationship, or as part of the health or other system through which the drug is provided, or both). Even the apparently simple intervention of taking a drug becomes complex if we consider the pharmacokinetics and pharmacodynamics of the drug within the body. Considering complexity as a multidimensional continuum, where there may be higher or lower levels of complexity across different aspects of the intervention and those involved in delivering or receiving it, can help review authors to decide what aspects of complexity are most important to focus on in their review.

There are three broad ways to think about intervention complexity, which offer alternative perspectives on the intervention and its wider context. The first two perspectives are focused on the intervention in question: (i) on how the intervention itself may be complex; and (ii) on how its implementation in specific situations may result in complex interactions. The third perspective shifts the focus of analysis from an individual intervention to (iii) the wider context within which it is implemented.

In the first, and simplest, understanding of intervention complexity, interventions with more than one component are described as ‘complex’. This is because it can be difficult to understand which components are most important, and which are responsible for intervention effects (if any). Analysis methods are often based on the assumption that multiple components act in an additive way.
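As a minimal sketch of what the additive assumption means, consider an intervention with two components, A and B. The notation is illustrative and not taken from this chapter: $x_A$ and $x_B$ indicate whether each component is present (1) or absent (0), $\beta_0$ is the expected outcome with neither component, and $\beta_A$ and $\beta_B$ are the separate component effects.

$$
E[Y] = \beta_0 + \beta_A x_A + \beta_B x_B
$$

Under this assumption, the expected effect of delivering both components together is simply $\beta_A + \beta_B$, the sum of their separate effects.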

The second perspective of intervention complexity focuses on interactions, which may be between components of the intervention, between the intervention and study participants, with the intervention context, or a combination of these aspects. Understanding complexity in these terms has two important implications: (1) considering more complex interactions may require different methods of analysis (e.g. where the dose or intensity of one component needs to reach a given threshold before another is activated); and (2) while the intervention may appear quite ‘simple’ (e.g. in the prescription of a single drug), complexity arises when other issues are considered, such as patient adherence to treatment.
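The threshold example in (1) can be sketched in code. The function below is purely illustrative: the name `expected_outcome`, the parameter values and the linear dose-response are assumptions made for this sketch, not a method described in this chapter.

```python
def expected_outcome(dose_a, b_present, threshold=3.0,
                     baseline=0.0, slope_a=1.0, bonus_b=2.0):
    """Toy model of a threshold interaction between two components.

    Component A contributes in proportion to its dose. Component B
    contributes only when it is present AND the dose of A has reached
    the threshold. All parameter values are hypothetical.
    """
    effect = baseline + slope_a * dose_a
    if b_present and dose_a >= threshold:
        effect += bonus_b  # B is 'activated' only at or above the threshold
    return effect
```

In this toy model, adding component B makes no difference while the dose of A is below the threshold, so an analysis that assumes a constant, additive effect of B would misdescribe it.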

In the third perspective, the analysis can shift focus from the consideration of a specific intervention and outcome(s), towards the wider context (understood as a ‘system’) within which the intervention is introduced. Here the analysis might examine the impact of the intervention on the system, or the effect of the system on the intervention. This approach attempts to address the bi-directional feedback that occurs in systems that can impact on the intervention’s effectiveness by either reducing or enhancing its effect.

This chapter focuses mainly on addressing the first two perspectives of intervention complexity, rather than the systems perspective, because these are most commonly used in Cochrane Reviews. The next section introduces the first two aspects of complexity in more detail, and the following section outlines some implications when the analysis is focused on the wider system.

17.1.2 Perspectives 1 and 2: intervention complexity arising from multiple components and/or interactions inside and outside the intervention

Systematic reviews often adopt an approach whereby effects of interventions, and (combinations of) their components, are seen to be additive (which of course they often are), without fully considering the implications of complexity. These reviews have appraised the primary studies on their ability to isolate components of interventions effectively from their context (see Section 17.2.4). However, intervention components may often have synergistic (as opposed to additive) and dis-synergistic effects, and this is one often-cited characteristic of intervention complexity (Pigott et al 2017).
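The distinction between additive, synergistic and dis-synergistic effects can be illustrated with a simple contrast for two components, A and B. This is a hedged sketch rather than a recommended analysis method: the function names and the example figures are hypothetical, and it assumes that higher outcome values are better.

```python
def interaction_contrast(y_none, y_a, y_b, y_ab):
    """Joint effect of A and B minus the sum of their separate effects.

    Arguments are mean outcomes with neither component, with A only,
    with B only, and with both components (hypothetical values).
    """
    effect_a = y_a - y_none
    effect_b = y_b - y_none
    joint_effect = y_ab - y_none
    return joint_effect - (effect_a + effect_b)


def classify(contrast, tol=1e-9):
    """Label the contrast, assuming higher outcome values are better."""
    if contrast > tol:
        return "synergistic"
    if contrast < -tol:
        return "dis-synergistic"
    return "additive"
```

For example, with hypothetical mean outcomes of 10 (neither component), 14 (A only), 13 (B only) and 20 (both), the contrast is positive and `classify` labels the combination synergistic. A joint outcome of 17 would be exactly additive.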

The UK Medical Research Council has produced guidance which highlights specific difficulties for evaluating “complex” interventions (as defined by the MRC):

“There are specific difficulties in defining, developing, documenting, and reproducing complex interventions that are subject to more variation than a drug. A typical example would be the design of a trial to evaluate the benefits of specialist stroke units. Such a trial would have to consider the expertise of various health professionals as well as investigations, drugs, treatment guidelines, and arrangements for discharge and follow up. Stroke units may also vary in terms of organization, management, and skill mix. The active components of the stroke unit may be difficult to specify, making it difficult to replicate the intervention.” (Campbell et al 2000)

Further elaboration describes key aspects of intervention complexity (Craig et al 2008, Petticrew et al 2019):

- whether there are multiple components within the experimental and control interventions, and whether they may interact with one another;

- the range of behaviours required by those delivering or receiving the intervention, and how difficult or variable they may be;

- whether the intervention, or its components, result in non-linear effects;

- the number of groups or organizational levels targeted by the intervention;

- the number and variability of outcomes; and

- the degree of flexibility or tailoring of the intervention permitted.

17.1.2.1 Context, implementation and mechanisms of action

Context is usually described as a key concept in the complexity literature, but it is difficult to define in isolation, and is often combined with related issues concerning how interventions are implemented and how they might work. Oxford Dictionaries define ‘context’ as: “the circumstances that form the setting for an event, statement, or idea, and in terms of which it can be fully understood”.

When defined in these terms, knowing the context of an intervention, and thus, ‘fully understanding’ how it gave rise to its outcomes, is both a highly desirable and an extremely challenging objective for review authors.

A further challenge is that defining ‘context’ is itself a matter of judgement. The ROBINS-I tool for appraisal of non-randomized studies (see Chapter 25) defines context broadly as “characteristics of the healthcare setting (e.g. public outpatient versus hospital outpatient), organizational service structure (e.g. managed care or publicly funded program), geographical setting (e.g. rural vs urban), and cultural setting and the legal environment where the intervention is implemented”.

Pfadenhauer and colleagues concur that the physical and social setting of the intervention needs to be considered as part of the context but, in line with the guidance in Section 17.1.1 on ‘conceptualizing intervention complexity’, expand this understanding to acknowledge the potential for interactions between intervention, participants and the setting within which the intervention is introduced:

“Context reflects a set of characteristics and circumstances that consist of active and unique factors, within which the implementation is embedded. As such, context is not [just] a backdrop for implementation, but interacts, influences, modifies and facilitates or constrains the intervention and its implementation. Context is usually considered in relation to an intervention, with which it actively interacts. It is an overarching concept, comprising not only a physical location but also roles, interactions and relationships at multiple levels.” (Pfadenhauer et al 2017)

An intervention may be planned as a specific set of procedures to be followed, but careful thought should also be given to implementation. Pfadenhauer and colleagues define intervention implementation as:

“an actively planned and deliberately initiated effort with the intention to bring a given intervention into policy and practice within a particular setting. These actions are undertaken by agents who either actively promote the use of the intervention or adopt the newly appraised practices. Usually, a structured implementation process consisting of specific implementation strategies is used being underpinned by an implementation theory.” (Pfadenhauer et al 2017)

Important aspects to consider include complexity in implementation (i.e. situations in which we expect the effects of an intervention to be modified by variation in implementation processes from study to study) and complexity in participant responses (i.e. situations in which we expect the effects of an intervention to be modified by variation between the participants receiving an intervention from study to study) (Noyes et al 2013). Sometimes intervention adaptations occur for implementation in different contexts (Evans et al 2019). Some adaptations and their implementation will work and some will not; it may even be possible to compare these different intervention adaptations and their implementations within the systematic review. To understand what has happened, it will be necessary to unpack the intended ‘function’ of the intervention that underlies variations in form.

“With most (simple) interventions, integrity is defined as having the ‘dose’ delivered at an optimal level and in the same way in each site. Complex intervention thinking defines integrity of interventions differently. The issue is to allow the form to be adapted while standardising the process and function.” (Hawe et al 2004)

Separating what is meant by intervention form as opposed to its function is illustrated by a cluster-randomized trial of a whole-community educational intervention to prevent depression. To maintain the ‘form’ of the intervention across clusters, the evaluators might want to ensure that the same written information was being given to every patient. On the other hand, to ensure that ‘function’ was consistent across clusters, they might want to support each site in devising a way to communicate the intervention which was tailored to “local literacy, language, culture and learning styles” (Hawe et al 2004). In this example, it was necessary to adapt the ‘form’ of part of the original intervention in order to ensure fidelity to its ‘function’ (or mechanism).

It can also be difficult to separate ‘context’ from ‘setting’ and ‘implementation’. For example, variations to context may also be influenced by the types and characteristics of participants receiving and delivering the intervention (and their responses), which may subsequently alter the context or the intervention (Hawe et al 2004).

When addressing intervention complexity, review authors are advised to understand the theoretical basis of the intervention in order to explain the anticipated mechanisms of action by which it is expected to work (Craig et al 2008). In some situations, there is a relatively well-understood (or perhaps just well-accepted) causal pathway between the intervention and its outcomes. This may derive from basic science – for example, the physiological pathways between specific medical interventions and changes in outcomes. For other more complex situations (such as those in which the intervention interacts with and adapts to its context) such pathways may be less well understood, less predictable and, crucially, non-linear (Petticrew et al 2019). Setting out the theoretical basis at the start of a review can help to clarify initial assumptions (e.g. among evidence users, or among the review team) about how the intervention is expected to work, and through what mechanisms. The results of the systematic review will inform and develop the intervention theory, as well as test its validity. The 2015 MRC guidance on designing complex intervention process evaluations is a helpful resource to inform this stage (Moore et al 2015). Advice is also available on appropriate use of mechanistic reasoning (Howick et al 2010), and on some of its limitations (Howick et al 2013).

To understand how an intervention works requires identifying its individual components and how these exert their effect, either separately or in combination. Further consideration will also need to be given to the implementation context and the processes involved in implementing an intervention (Campbell et al 2000, Craig et al 2008). The implication is that the effects of an intervention may be modified by variation in implementation processes from study to study in a review, and by variation between the participants receiving the intervention from study to study (Noyes et al 2013). Logic models and the use of theory in systematic reviews (Noyes et al 2016b) are described in Section 17.2.1, and elsewhere in the Handbook (see also Chapter 2, Section 2.5.1, Chapter 3, Section 3.2 and Chapter 21, Section 21.6.1).

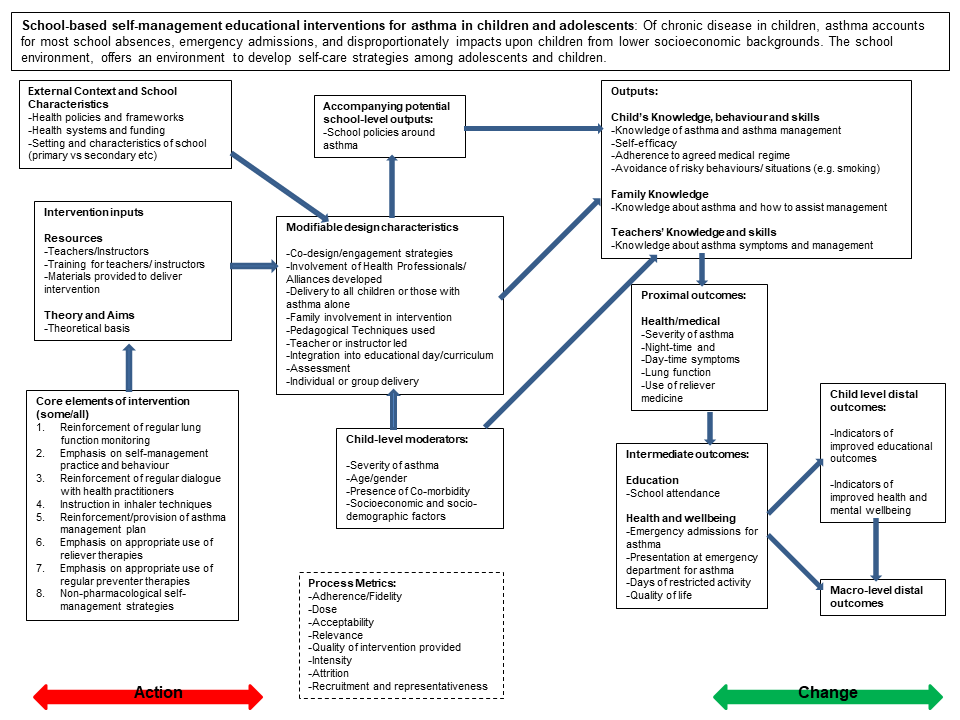

Example review

An exemplar multicomponent Cochrane Review of school-based self-management interventions for asthma in children and adolescents is used throughout this chapter to illustrate aspects of complexity and its management in a systematic review (see Box 17.1.a). This review was interested in addressing both intervention effectiveness and understanding how the intervention was implemented, and whether implementation in different groups might explain differences in observed impact. There is a socio-economic gradient in educational impacts due to asthma, with children from lower socio-economic groups and ethnic minorities being more likely than others to report asthma-related hospitalization. One of the reasons for this may be differential effects in school-based self-management interventions. Given that socio-economic inequalities are manifest in the environment, these issues cannot be understood purely in terms of individual participant characteristics, and the review needed to take account of the external context and school characteristics. It did not, however, attempt a ‘full systems’ perspective on the intervention as outlined in Section 17.1.1.

Box 17.1.a A published example of a Cochrane Review assessing a multi-component intervention and how the interpretation of the effectiveness data is enhanced by an additional analysis (Harris et al 2018). Reproduced with permission of John Wiley & Sons

| School-based self-management interventions for asthma in children and adolescents: a mixed methods systematic review | |
| --- | --- |
| The problem | Asthma is a common chronic respiratory condition in children characterized by symptoms including wheeze, shortness of breath, chest tightness and cough. Improving the inhaler technique of children with asthma in response to recognizing their worsening symptoms may enable children to manage their condition more effectively. Schools are an opportunity to engage with these children to improve self-management of their asthma care because: |
| | Self-management interventions have multiple components, which vary across studies, so the review needs to consider the combination of intervention components that are associated with successful delivery of the intervention within the school context. |
| Participant | School aged children and young people (5 to 18 years) with asthma who participated in an intervention in their school |
| Intervention | School-based asthma self-management programmes |
| Comparison | Usual care |
| Outcome (primary) | Asthma symptoms or exacerbations leading to admission to hospital |
| Review questions | |
| Types of data | |
| Review design and methods used | |
| Intervention description and dimensions of complexity | Self-management is the process of educating and enabling patients to control their asthma symptoms to prevent acute episodes warranting medical intervention. These might include the following intervention, implementation and context aspects: More than one active component included in the intervention delivered across included studies, such as |
| | Usual care: Standard asthma education |
| | Behaviour or actions of intervention recipients or participants to which the intervention is directed: good inhaler technique, being able to recognize and respond to asthma symptoms. |
| | Organizational levels in the school context targeted by the intervention: disseminating self-management education through schools to improve school attendance. Health care is managed through the education system, from health policy to school policy on asthma management. |
| | The degree of intervention adaptation expected, or flexibility permitted, within the studies across schools applying or implementing the intervention. |
| | The level of skill required by those delivering the intervention in order to meet the intervention objectives, such as the knowledge to instruct children in self-management of asthma (e.g. teacher, healthcare practitioner). |
| | The level of skill required for the targeted behaviour when entering the included studies by those receiving the intervention, in order to meet the intervention objectives: the child’s capacity to learn. |
| Intervention mechanisms | How the intervention might work is outlined in the pre-analysis logic model (see Figure 17.2.a) to theorize the causal chain necessary to lead to outcomes of interest from school-based self-management interventions. The post-analysis logic model presents the components of the actual interventions modelled where evidence or impact was observed in the data and where it was not. The model maps moderators, intermediate outcomes, proximal and distal outcomes and notes review gaps. |
| Results | Thirty-three studies provided information for the QCA analysis and 33 randomized trials measured the effects of interventions. In summary, the review authors concluded school-based asthma self-management interventions probably reduce hospital admission and may slightly reduce children’s emergency department attendance, although their impact on school attendance could not be measured reliably. They probably reduce the number of days where children experience asthma symptoms, but their effects on asthma-related quality of life are small. Interventions that had a theoretical framework, engaged parents and were run outside of children’s free time were associated with successful implementation. QCA results highlighted the importance of an intervention being theory-driven along with additional factors, such as parental involvement, child satisfaction and running the intervention outside of children’s own time as being drivers of successful implementation. School-based self-management interventions were shown to be likely to reduce mean hospitalizations, reduce unplanned visits to hospitals or primary care, reduce the number of days of restricted activity by just under half a day over a two-week period and may reduce the number of children who visit emergency departments. However, there is insufficient evidence to determine whether requirement of reliever medications is affected by these interventions. See study for further details on results. |

17.1.3 Perspective 3: interventions within complex (adaptive) systems

The systems perspective sees the intervention not as an isolated event or as a package of components, but as a part of, or an ‘event’ within, an interconnected system (Hawe et al 2009). Thus, the intervention interacts with and within a pre-existing system and the review aims to understand the intervention within this wider context, examining how it changes the system, how the system affects the intervention, or both. When doing a review using this perspective, authors not only need to consider the components of the intervention (as in Section 17.1.2), but will also need to define the system within which the intervention is introduced. For example, the introduction of a new vaccine (including its precise timing) in a low- or middle-income country needs to take many factors into account including: supplies of the vaccine (possibly including agreements between governments and international companies); maintenance of the cold chain by upgrading healthcare facilities (e.g. fridges); training of health workers; and delivery of the vaccine through the normal health system as opposed to parallel vaccination systems (e.g. to deliver standard childhood vaccinations). This may have positive or negative impacts on the system as a whole, by using synergies and investing in better infrastructure or human capacities or by over-burdening an already overstretched health system and affecting other services and interventions in unintended (and sometimes unanticipated) ways.

In a systems perspective, complexity arises not only from interactions between components, but also from the relationships and interactions between a system’s agents (e.g. people, or groups that interact with each other and their environment), and its context (Section 17.2.4) (Petticrew et al 2019). One of the implications for systematic reviews is that the intervention itself may be defined very broadly: as a change in a system, or a set of processes, compared to a package of interacting components, or both. Also, reviews taking a systems perspective may aim to answer a wide range of questions about the functioning of the system and how it changes over time, and about the contribution of interventions to those system changes (Garside et al 2010, Petticrew 2015). A full description is beyond the scope of this chapter and the role of complex systems perspectives in systematic reviews is still evolving.

Review authors should refer to Petticrew and colleagues (Petticrew et al 2019) when deciding whether a systems perspective will add value to a review. The following questions should be considered when deciding whether a systems perspective might be helpful.

- What do my review users want to know about? The intervention, the system, or both?

- At what level is the intervention delivered? Is the intervention likely to have anticipated effects of interest to users at levels above the individual level? If the implementation and effects spill over into the family, community, or beyond, then taking a systems perspective may be helpful.

- Is the intervention: (i) a discrete, identifiable intervention, or package of interventions; or (ii) a more diffuse change within an existing system?

Review authors should also take account of the resources available to conduct the review. A large scale, theoretically informed review of an intervention within its wider system may be time-consuming, expensive and require a large multidisciplinary team. It may also produce complex answers that are beyond the needs of many users.

17.1.4 Summary of main points in this section

There are three ways of understanding intervention complexity: (i) in terms of the number of components in the intervention; (ii) in terms of interactions between intervention components or interactions between the intervention and its context, or both; and (iii) in terms of the wider system within which the intervention is introduced. When considering intervention complexity, review authors may need to pay particular attention to the intervention’s mechanisms of action, the context(s) within which it is introduced, and issues relating to implementation.

A review team should consider which perspective on complexity might be relevant to their review:

- Is the review dealing with interventions comprising multiple components?

- Are interventions of interest likely to interact with the context in which they are implemented, and is intervention adaptation likely to be taking place?

- Which analytical methods will need to be used (e.g. those suitable for modelling interactions and/or non-linear effects)?

- How are the core concepts of mechanisms of action, context and implementation defined?

For further information on logic models and defining interventions see Chapter 2, Section 2.5.1, Chapter 3, Section 3.2 and Chapter 21, Section 21.6.1. See the following for key references on the topics discussed in this section. On understanding intervention complexity: Campbell et al (2000), Craig et al (2008), Kelly et al (2017), Petticrew et al (2019); on mechanisms of action: Howick et al (2010), Fletcher et al (2016), Noyes et al (2016b); on context and implementation: Hawe et al (2009), Noyes et al (2013), Moore et al (2015), Pfadenhauer et al (2017).

17.2 Formulation of the review

Addressing complexity in a review frequently involves asking questions about issues other than effectiveness, such as the following.

- Under what circumstances does the intervention work (Thomas et al 2004, Squires et al 2013)?

- What is the relative importance of, and synergy between, different components of multicomponent interventions?

- What are the mechanisms of action by which the intervention achieves an effect?

- What are the factors that impact on implementation and participant responses?

- What is the feasibility and acceptability of the intervention in different contexts?

- What are the dynamics of the wider system?

Broadly, therefore, systematic reviews can consider complexity in terms of the intervention (e.g. how the components of the intervention interact), and also in terms of how it is implemented. In this situation, systematic reviews can use the concept of complexity to help develop theories of implementation, and inform strategies to improve implementation (Nilsen 2015).

As Chapter 2 and Chapter 3 outline, addressing broader review questions has implications for the search strategy, the types of evidence, the eligibility criteria, the evidence appraisal, and the review design and synthesis methods (Squires et al 2013). Sometimes more than one type of study design may be required to address the questions of interest, the products of which might subsequently be integrated in a third synthesis (see Chapter 21 and Glenton et al (2013), Harris et al (2018)).

17.2.1 The role of theory and logic models

As outlined in Chapter 2, review authors should set out in their protocol how they expect the intervention of interest to work. When the causal pathways are well accepted, as they are in many reviews, this can be a relatively straightforward process which simply references the appropriate literature. In reviews where there is a lot of complexity or diversity between interventions, logic models are used to provide schematic representations of causal pathways that illustrate the potential mechanisms of action – and their mediators and moderators – underlying interventions (Anderson et al 2011), as discussed in Chapter 2, Section 2.5.1.

The example Cochrane Review in Box 17.1.a illustrates the benefits of using a logic model with both pre- and post-synthesis versions (Harris et al 2018). Figure 17.2.a presents the pre-synthesis version of the review logic model that starts to model the interventions’ core elements and expected outcomes in changes of behaviour on delivery of the intervention. The model also identifies contextual and individual participant aspects that might modify intervention delivery. The model also introduces the identification of process measures to inform the expected function of the intervention.

Figure 17.2.a Logic model of school-based asthma interventions (Harris et al 2018). Reproduced with permission of John Wiley & Sons

17.2.2 Formulating questions to address intervention complexity

We emphasize the importance of having a clear objective when starting a review, observing that it is often more useful to address questions that seek to identify the circumstances where particular approaches to intervention might be more appropriate than others, rather than simply asking ‘does this intervention work?’ (Higgins et al 2019). Chapter 2 outlines the issues that should be considered when formulating review questions and Petticrew and colleagues outline how to refine review questions through drawing on existing theoretical models, emphasizing it is important to prioritize which contextual factors to examine (Booth et al 2019b, Petticrew et al 2019). In situations of greater intervention complexity, review authors should consider how consumers and other stakeholders might help identify which contextual factors might need detailed examination in the review. It is possible that different groups of people will have quite diverse needs and taking them all into account in a single review may be impossible. Detailed advice is available, however, on ways to engage interested parties in the development of review questions, including formal methods for question prioritization. (See Chapter 2, Section 2.4, and Oliver et al (2017), Booth et al (2019b) for further information on consumer and stakeholder involvement in formulating review questions.) Review authors may find guidance given in Chapter 3 helpful to prioritize which comparisons to examine and, thus, which questions to answer. Sometimes review authors may find that they need to undertake a formal scoping review in order to understand fully how the intervention is defined in the literature (Squires et al 2013).

When considering which aspects of the intervention or its implementation and wider context might be important, review authors should remember that some variation is often inevitable and investigating every conceivable difference will be impossible. In particular, not all aspects of intervention complexity should be detailed in the review question; it may be sufficient to consider these within the logic model and any subgroups identified for synthesis. The review question simply specifies which sources of variation in outcomes will be investigated. In the review example detailed in Box 17.1.a, there were two overall objectives: (1) to identify the intervention features that are aligned with successful intervention implementation; and (2) to assess the effectiveness of school-based interventions for improvement of asthma self-management on children’s outcomes. The ways in which these objectives shaped the review’s eligibility criteria and analytical methods will be described in the following sections.

17.2.3 PICO and complexity

The PICO framework (population, intervention, comparator(s) and outcomes, see Chapter 3) is widely used by systematic review authors to help think through the framing of research questions. The PICO elements may become more complex in reviews where significant intervention complexity is anticipated.

The population considered in a review is commonly described in terms of aspects of a health condition (e.g. patients with osteoporosis) or behaviour (e.g. adolescents who smoke) as well as relevant demographic factors and features of the setting of the study. In complex health and social research that focuses on changes in populations, the definition of a population may be contested. Crucially, populations are not just aggregates of individual characteristics: social (and physical) relations may also shape population health distributions, as shown in analysis of the spread of obesity through social networks (Christakis and Fowler 2007, Krieger 2012). Review authors are often interested in both the population as a whole, and how the intervention differentially affects different groups within the population (see also Chapter 16 on equity).

With respect to the intervention, the key challenge lies in defining the intervention, for reasons described in detail in the previous sections. When considering intervention complexity, review authors should consider the wide range of ways in which an intervention may be implemented and be wary of excluding primary evaluations of the intervention simply because the form appears different, even if the function is similar (see Section 17.1.2).

The comparisons in the review may require careful consideration. Identifying a suitable comparator can be difficult, particularly where structural interventions, such as taxation, regulation or environmental change, are being evaluated (Blankenship et al 2006), or where each intervention arm is complex. Review authors should be particularly mindful of possible confounding due to systems effects, where wider contextual factors might reduce, or enhance, the effects of an intervention in particular circumstances (see Sections 17.1.1 and 17.1.3). For a detailed discussion of planning comparisons for synthesis, see Chapter 3 and Chapter 9.

Outcomes of interest are likely to include a range of intended and unintended health and non-health effects of interest to review users. The choice of outcomes to prioritize is a matter of judgement and perspective, and the rationale for selection decisions should be explicitly reported. Review authors should note that the prioritization of outcomes varies culturally, and according to the perspective of those directly affected by an intervention (e.g. patients, an at-risk population), those delivering the intervention (e.g. clinicians, staff working for healthcare or public health institutions), or policy makers or others deciding on or financing an intervention and the general public. However, the answer is not simply to include any plausible outcome: a plausible theoretical case can probably be made for most outcomes, but that does not mean they are meaningful. Worse, including a wide range of speculative outcomes raises the risk of data dredging and vastly increases the complexity of the analysis and interpretation (see Chapter 9, Section 9.3.3 on multiplicity of outcomes and Chapter 3, Section 3.2.4.3). Again, an understanding of the intervention theory can help select the outcomes for which the strongest plausible a priori case can be made for inclusion – perhaps those outcomes for which there is prior evidence of an important association with the intervention. As the illustrative logic model (Figure 17.2.a) shows, there can be numerous intermediate outcomes between the intervention and the final outcome of interest. Guidance is available on how to select the most important outcomes from the list of all plausible outcomes (Chapter 3, Section 3.2.4 and Guyatt et al (2011)). It will also be important to determine the availability of core outcome sets within the review context (see www.comet-initiative.org). Core outcome sets are now becoming available for more complex interventions and may help to guide outcome selection (e.g. see Kaufman et al (2017)).

17.2.4 Addressing context and implementation

One key aspect of intervention complexity is that intervention effects are often strongly context-dependent, with context acting as a moderator of the effect (i.e. influencing its strength or direction) as well as a mediator of the effect (i.e. explaining why an effect is observed). This has implications for judging the wider applicability of review findings when applying GRADE assessment (see Chapter 14). One of the most common challenges is that interventions have different effects in different contexts, and so the review authors will need to take a view (in consultation with review stakeholders and review users) about whether it is more meaningful to restrict the review’s focus to one particular context or setting (e.g. studies carried out in schools, or studies conducted in specific geographical areas), or to include evidence from a range of contexts (a variant of the ‘lumping’ and ‘splitting’ argument (Chapter 2, Section 2.3.2)). For some reviews, understanding how the intervention and its effects change across different contexts is a key reason for doing the review, and in such cases review authors will need to take account of context in planning and conducting their review. Booth and colleagues provide guidance on how to do this, noting that there are a range of contexts to be considered, including: (i) the context of the review question; (ii) the contexts of the included studies; and (iii) the implementation context into which the findings or recommendations arising from the review are to be introduced (Booth et al 2019a). Note, however, that Cochrane Reviews are rarely written with a specific context in mind although some systematic reviews may be undertaken for a specific setting (see Pantoja et al (2017) for an example of an overview of reviews which examines specifically issues from a low-income country perspective). When a review aims to inform decisions in a specific situation, consideration should be given to the ‘directness’ of the evidence (the extent to which the participants, interventions and outcome measures are similar to those of interest); this is a core feature of GRADE assessment, discussed in Chapter 14 (GRADE Working Group 2004).

The TIDieR framework (Hoffman et al 2014) refers to “the type(s) of location(s) where the intervention occurred, including any necessary infrastructure or relevant features”, and the iCAT_SR tool notes that “the effects of an intervention may be dependent on the societal, political, economic, health systems or environmental context in which the intervention is delivered” (Lewin et al 2017). Finally, the PRECIS-2 tool, while written to support the design of trials, also contains useful information for review authors when considering how to address issues relating to context and implementation (Loudon et al 2015).

These are important considerations because for social and public health interventions (and perhaps any intervention), the political context is often an important determinant of whether interventions can be implemented or not; regulatory interventions (e.g. alcohol or tobacco control policies) may be less politically acceptable within certain jurisdictions, even if such interventions are likely to be effective. Historical and cultural contexts are also often important moderators of the effects and acceptability of public health interventions (Craig et al 2018). It is therefore impossible (and probably misleading) to attempt to specify what ‘is’ or ‘isn’t’ context, as this depends on the intervention and the review question, as well as how the intervention and its effects are theorized (implicitly or explicitly) by the review authors. Booth and colleagues suggest that a supplementary framework (e.g. the Context and Implementation of Complex Interventions (CICI) Framework (Pfadenhauer et al 2017); see Section 17.1.2.1) can help to understand and explore contextual issues: for example, helping to decide whether to ‘lump’ or ‘split’ studies by context, and how to frame the review question and subsequent stages of the review (Booth et al 2019a).

17.2.5 Which types of study address intervention complexity?

As always, the decision about which study designs to include should be led by the review questions, and the ‘fitness for purpose’ of those studies for answering the review question(s) (Tugwell et al 2010). As Chapter 3, Section 3.3 outlines, most Cochrane Reviews focus on synthesizing the results from randomized trials, because of the strength of this study design in establishing a causal relationship between an intervention and its outcome(s). However, as it is not always feasible to conduct randomized trials of all types of intervention (e.g. the ‘structural’ interventions mentioned in Section 17.2.3), it is also accepted that evidence about the effects of interventions, and interactions between components of interventions, may be derived from randomized, quasi-experimental or non-randomized designs (see also Chapter 24). Large-scale and policy-based interventions (such as area-based regeneration programmes) may not be able to use closely comparable control populations, or may not use separate control groups at all, and may use uncontrolled before and after or interrupted time series designs or a range of quasi-experimental approaches. Excluding non-randomized and uncontrolled studies may mean excluding the few evaluations that exist, and in some cases such designs can provide adequate evidence of effect (Craig et al 2012). For example, when evaluating the impact of a smoking ban on hospital admissions for coronary heart disease, Khuder and colleagues employed a quasi-experimental design with interrupted time series (Khuder et al 2007).

As outlined in Section 17.2.2, the questions asked in systematic reviews that address complexity often go beyond asking whether a given intervention works, to ask how it might work, in which circumstances and for whom. Addressing these questions can require the inclusion of a range of different research designs. In particular, when evidence is needed about the processes by which an intervention influences intermediate and final outcomes, or about intervention acceptability and implementation, qualitative evidence is often included. Qualitative evidence can also identify evidence of unintended adverse effects which may not be reported in the main quantitative evaluation studies (Thomas and Harden 2008). Petticrew and colleagues’ Table 1 summarizes each aspect of complexity and suggests which types of evidence might be most useful to address each issue. For example, when aiming to understand interactions between intervention and context, multicentre trials with stratified reporting, observational studies which provide evidence of mediators and moderators, and qualitative studies which observe behaviours and ask people about their understandings and experiences are suggested as being helpful study designs to include (Petticrew et al 2019). See also Noyes et al (2019) and Rehfuess et al (2019) for further information on matching study designs to research questions to address intervention complexity.

17.2.6 Summary of main points in this section

In systematic reviews addressing intervention complexity it may be more useful to address questions that seek to identify the circumstances where particular approaches to intervention might be more appropriate, effective and feasible than others, rather than simply asking ‘does this intervention work?’

Logic models represent graphically the way that the intervention is thought to result in its outcomes and the range of interactions between it and its context.

Definitions of population, intervention and outcomes (i.e. the review and comparison PICOs) are sometimes quite broad, and need to consider how interventions and their effects can change across contexts.

Review authors need to consider whether and how to review evidence across multiple contexts, and in particular whether it makes sense, scientifically and practically (in terms of value to decision makers), to integrate them within the same review.

A range of different types of study may be relevant in systematic reviews addressing intervention complexity. Review authors should specify their questions in detail, identifying which types of study are needed for different aspects of their question(s).

Chapter 3 contains detailed information on specifying review and comparison PICOs that is essential reading for review authors addressing intervention complexity. The illustration of a logic model in Figure 17.2.a should be read alongside the introduction to logic models in Chapter 2, Section 2.5.1. See also Chapter 2, Section 2.3 for discussion about breadth and depth in review questions. See the following for key references on the topics discussed in this section. On theory and logic models: Anderson et al (2011), Kneale et al (2015), Rohwer et al (2017); on question formulation: Squires et al (2013), Higgins et al (2019), Petticrew et al (2019); on the TIDieR framework: Hoffman et al (2014); on the iCAT_SR tool: Lewin et al (2017); on the PRECIS-2 tool: Loudon et al (2015); on the CICI framework: Pfadenhauer et al (2017); on which types of study to include: Noyes et al (2019), Petticrew et al (2019), Rehfuess et al (2019).

17.3 Identification of evidence

There is relatively little detailed guidance on searching for evidence to include in reviews that focus on exploring intervention complexity (though see Chapter 4 and associated supplementary information (Noyes et al 2019)). A key challenge is that, as outlined in Sections 17.2.5 and 17.5, such reviews may include a wide range of qualitative and quantitative evidence to answer a range of questions. Searches for information on theory, context, processes and mechanisms (see Section 17.1.2) by which interventions are implemented and outcomes achieved may also be needed.

This requires some consideration of the location of such data sources (e.g. including sources outside the standard health literature), likely study designs, and the role of theory in guiding the review searches and methodological decisions. Policy documents, qualitative data, sources outside the standard health literature and discussion with a knowledgeable advisory group may also provide useful information. Kelly and colleagues outline in more detail the scoping and refining stages that are required for reviews that need to encompass intervention complexity (Kelly et al 2017). Indeed, including a separate ‘mapping’ phase within a systematic review, where a broader search is carried out to understand the extent of research activity, can be a highly valuable addition to the review process (Gough et al 2012). Some preparatory examination of this evidence may help to determine what form the intervention takes, what levels or structures it is aimed at changing, what its objectives are and how it is expected to bring about change (in effect, what is the underlying logic model). The iCAT_SR tool, which can help with characterizing the main dimensions of intervention complexity, can also help here to determine what type of evidence needs to be located (see Box 17.1.a; Lewin et al (2017)).

Booth and colleagues provide useful pointers on the value of ‘cluster searching’, which they define as a “systematic attempt, using a variety of search techniques, to identify papers or other research outputs that relate to a single study” (Booth et al 2013). This means that a cluster of studies both directly and indirectly related to a ‘core’ effectiveness study are located to inform, for example, context, acceptability, feasibility and the processes by which the intervention influences the outcomes of interest (Booth et al 2013). Consideration of these issues is often critical for understanding intervention complexity, so review authors need to take account of all relevant information about included studies, even though it may be scattered between multiple publications. Beyond cluster searching, a wider search for qualitative and process evaluation studies that are unrelated to the included trials of interventions may help to create a bigger pool of evidence to synthesize, enabling review authors to address broader aspects such as intervention implementation (Noyes et al 2016a).

While this kind of search can inform the design and framing of the review, a comprehensive search is required to identify as much as possible of the body of evidence relevant to the review (see Chapter 4). As for any review, the search should be led by the review question, a detailed understanding of the PICO elements, and the review’s eligibility criteria (Chapter 3).

17.3.1 Summary of main points in this section

Addressing intervention complexity in systematic reviews may involve searching for evidence on a range of issues other than effectiveness; it may involve searching for evidence on processes, mechanisms and theory.

The identification of relevant evidence should be driven by the review questions, and should consider the ‘fitness for purpose’ of different types of qualitative and quantitative evidence for answering those questions.

For further information see Chapter 4 and also the supplementary information associated with Noyes et al (2019). Table 1 in Petticrew et al (2019) also describes the relationship between different types of review questions, and the sort of evidence that might be sought to answer them. See the following for key references on the topics discussed in this section: Booth et al (2013), Brunton et al (2017).

17.4 Appraisal of evidence

It was noted in Section 17.2.5 that reviews addressing intervention complexity need to be focused on the concept of ‘fitness for purpose’ of evidence – that is, they need to consider what type of evidence is best suited to answer the research question(s). As previously described, these include questions about the implementation, feasibility and acceptability of interventions, and questions about the processes and mechanisms by which interventions bring about change. This has implications for the appraisal of evidence in a systematic review, and appropriate tools should be used for each type of evidence included, assessing the risk of bias for the way in which it is used in each review. When appraising studies that evaluate the effectiveness of an intervention, the Cochrane risk-of-bias tool should be used for trials (Chapter 8) and the ROBINS-I tool for non-randomized study designs (Chapter 25). Chapter 21 contains guidance on evaluating qualitative and implementation evidence.

17.5 Synthesis of evidence

Many useful sources provide further guidance on how to choose an analytic approach that takes account of intervention complexity. This section highlights texts for further reading in terms of which types of questions different methods might enable review authors to answer.

Higgins and colleagues separate synthesis methods into three levels: (i) those that are essentially descriptive, and help to compare and contrast studies and interventions with one another; (ii) those that might be considered ‘standard’ methods of meta-analysis – including meta-regression (see Chapter 10) – which enable review authors to examine possible moderators of effect at the study level; and (iii) more advanced methods, which include network meta-analysis (see Chapter 11), but go beyond this and encompass methods that enable review authors to examine intervention components, mechanisms of action, and complexities of the system into which the intervention is introduced (Higgins et al 2019).

At the outset, even when a statistical synthesis is planned, it is usually useful to begin the synthesis using non-quantitative methods, understanding the characteristics of the populations and interventions included in the review, and reviewing the outcome data from the available studies in a structured way. Informative tables and graphical tools can play an important role in this regard, assisting review authors to visualize and explore complexity. These include harvest plots, box-and-whisker plots, bubble plots, network diagrams and forest plots. See Chapter 9 and Chapter 12 for further discussion of these approaches.

Standard meta-analytic methods may not always be appropriate, since they depend on reasonable comparability of both interventions and comparators – something that may not apply when synthesizing evidence with high heterogeneity. Chapter 3 considers in detail how to think about the comparability of, and categories within, interventions, populations and outcomes. However, where interventions and populations are judged sufficiently similar to answer questions which aggregate the findings from similar studies, then approaches such as standard meta-analysis, meta-regression or network meta-analysis may be appropriate, particularly when the mechanism of action is clearly understood (Viswanathan et al 2017).
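As an illustration of what a ‘standard’ meta-analysis computes, the following is a minimal sketch of inverse-variance random-effects pooling using the DerSimonian and Laird estimator of between-study variance. It is not a prescribed Cochrane implementation: the function name and the example numbers are assumptions made up for illustration, and review authors would normally use established meta-analysis software (see Chapter 10).

```python
import math


def random_effects_pool(effects, variances):
    """Pool study effect estimates with a DerSimonian-Laird random-effects model.

    effects   -- one effect estimate per study (e.g. log odds ratios)
    variances -- the within-study variance of each estimate
    """
    # Fixed-effect (inverse-variance) weights and pooled estimate
    w = [1.0 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1

    # Method-of-moments estimate of between-study variance (tau^2), floored at 0
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    # I^2: percentage of variability attributed to heterogeneity rather than chance
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    # Random-effects weights add tau^2 to each within-study variance
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return {"pooled": pooled, "se": se, "tau2": tau2, "i2": i2, "q": q}


# Hypothetical data: two studies with very different effects (illustration only)
example = random_effects_pool([0.0, 1.0], [0.01, 0.01])
```

A large estimated between-study variance or I² in output of this kind is one signal that the comparability assumption discussed above may not hold, and that the heterogeneity itself deserves investigation.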

Questions concerning the circumstances in which the intervention might work and the relative importance of different components of interventions require methods that explore between-study heterogeneity. Subgroup analysis and meta-regression enable review authors to investigate effect moderators with the usual caveats that pertain to such observational analyses (see Chapter 10). Caldwell and Welton describe alternative quantitative approaches to synthesis, which include ‘component-based’ meta-analysis where individual intervention components (or meaningful combinations of components) are modelled explicitly, thus enabling review authors to identify those components most (or least) associated with intervention success (Caldwell and Welton 2016).
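The idea of examining a study-level moderator can be sketched with a deliberately simple meta-regression on a single binary covariate, such as whether an intervention included a particular component. This is a sketch under stated assumptions, not the component-based method described by Caldwell and Welton: it uses a fixed-effect weighted least squares fit that ignores residual heterogeneity, and the function name, covariate coding and numbers are all hypothetical.

```python
import math


def meta_regression_binary(effects, variances, moderator):
    """Weighted least squares regression of effect estimates on one moderator.

    moderator -- one value per study, e.g. 1 if a given intervention component
                 was present and 0 if it was absent (hypothetical coding)
    Returns (intercept, slope, se_slope) under a fixed-effect model.
    """
    w = [1.0 / v for v in variances]  # inverse-variance weights
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, moderator)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw

    # Weighted sum of squares of the moderator around its weighted mean
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, moderator))
    if sxx == 0:
        raise ValueError("moderator does not vary across studies")

    sxy = sum(
        wi * (xi - x_bar) * (yi - y_bar)
        for wi, xi, yi in zip(w, moderator, effects)
    )
    slope = sxy / sxx                  # difference in effect per unit of moderator
    intercept = y_bar - slope * x_bar  # expected effect when moderator is 0
    se_slope = math.sqrt(1.0 / sxx)    # standard error with known variances
    return intercept, slope, se_slope


# Hypothetical data: four studies, two with the component and two without
intercept, slope, se_slope = meta_regression_binary(
    effects=[0.1, 0.1, 0.5, 0.5],
    variances=[0.04, 0.04, 0.04, 0.04],
    moderator=[0, 0, 1, 1],
)
```

Because studies are not randomized to moderator values, a slope from an analysis of this kind is an observational association across studies and carries the caveats noted in Chapter 10.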

When the review questions ask review authors to consider how interventions achieve their effect, types of evidence other than randomized trials are vital to provide theory that identifies causal connections between intervention(s) and outcome(s). Logic models (see Section 17.2.1 and Chapter 2) can provide some rationale for the selection of factors to include in analysis, but the review may require an additional synthesis of qualitative evidence to elucidate the complexity adequately. This is especially the case when understanding differential intervention effects that require review authors to consider the perspectives and experiences of those receiving the intervention. See Chapter 21 for a detailed exploration of the methods available. While logic models aim to summarize how the interactions between intervention, participant and context may produce outcomes, specific causal pathways may be identified for testing. Causal chain analysis encompasses a range of methods that help review authors to do this (Kneale et al 2018), including meta-analytic path analysis and structural equation modelling (Tanner-Smith and Grant 2018), and model-based meta-analysis (Becker 2009). These types of analyses are rare in Cochrane Reviews, as methods are still developing and require relatively large datasets.

Integrating different types of data within the same analysis can be a challenging but powerful approach, often enabling the theories generated in synthesis of qualitative literature to be used to explore and explain heterogeneity between quantitative studies (Thomas et al 2004). Reviews with multiple components and analyses can address different questions relating to complexity, often in a sequential way, with each component building on the findings of the previous one. Methods used include: mixed-methods synthesis (involving qualitative thematic synthesis, meta-analysis and cross-study synthesis); Bayesian synthesis (where qualitative studies are used to generate informative priors); and qualitative comparative analysis (QCA: a set-based method which uses Boolean algebra to juxtapose intervention components in configurational patterns; see Chapter 21, Section 21.13, and Thomas et al (2014)). Such analyses are explanatory: they aim to identify differential intervention effects and also to explain why they occur (Cook et al 1994). The example review given in Box 17.1.a is a multi-component review, which integrates different types of data in order better to understand differential intervention effects. It uses qualitative data from process evaluations to identify which intervention features were associated with successful implementation. It then uses the inferences generated in this analysis to explore heterogeneity between the results of randomized trials, using what might be considered ‘standard’ meta-analytic and meta-regression methods. It is important to bear in mind that the review question always comes first in these multi-component reviews: the decision to use process evaluation data in this way was driven by an understanding of the context within which these interventions are implemented. A different mix of data will be appropriate in different situations.
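To make the set-based logic of QCA more concrete, the following minimal sketch builds a crisp-set truth table: each study is coded for the presence or absence of selected intervention features, studies sharing the same configuration are grouped, and the proportion of studies in each configuration that show the outcome (its ‘consistency’) is calculated. The feature names and codings are hypothetical and are not taken from the review in Box 17.1.a; a full QCA would also involve calibration, Boolean minimization and sensitivity checks, which are not shown.

```python
from collections import defaultdict


def truth_table(cases, conditions, outcome):
    """Group cases by their configuration of conditions (crisp-set QCA).

    cases      -- list of dicts mapping condition/outcome names to True/False
    conditions -- names of the conditions that define a configuration
    outcome    -- name of the outcome of interest
    Returns {configuration: {"n": cases, "consistency": share with outcome}}.
    """
    rows = defaultdict(lambda: [0, 0])  # configuration -> [n cases, n with outcome]
    for case in cases:
        config = tuple(bool(case[c]) for c in conditions)
        rows[config][0] += 1
        rows[config][1] += int(bool(case[outcome]))
    return {
        config: {"n": n, "consistency": k / n}
        for config, (n, k) in rows.items()
    }


# Hypothetical coding of five studies (illustration only)
studies = [
    {"parent_involved": True, "in_class_delivery": True, "implemented": True},
    {"parent_involved": True, "in_class_delivery": True, "implemented": True},
    {"parent_involved": True, "in_class_delivery": False, "implemented": True},
    {"parent_involved": True, "in_class_delivery": False, "implemented": False},
    {"parent_involved": False, "in_class_delivery": False, "implemented": False},
]
table = truth_table(studies, ["parent_involved", "in_class_delivery"], "implemented")
```

Configurations with high consistency are candidates for combinations of features that may be sufficient for the outcome, whereas configurations with mixed results point to features or contexts that the coding has not yet captured.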

Finally, review authors may want to synthesize research to reach a better understanding of the dynamics of the wider system in which the intervention is introduced. Analytical methods can include some of those already mentioned – for combining diverse types of data – but may also include methods developed in systems science such as systems dynamics models and agent-based modelling (Luke and Stamatakis 2012).

17.5.1 Summary of main points in this section

Methods of synthesis can be understood at three levels: (i) those that help review authors describe studies and understand their similarities and differences; (ii) those that can be used to combine study findings in fairly standard ways; and (iii) more advanced approaches that include network meta-analysis for combining results across different interventions, but also enable review authors to examine intervention components, mechanisms of action and complexities of the system within which the intervention is introduced.

For further information about steps to follow before results are combined, review authors should consider the guidance outlined in Chapter 9 to summarize studies and prepare for synthesis. Standard meta-analytical methods are outlined in Chapter 10, with Section 10.10 on investigating heterogeneity particularly relevant. Methods for undertaking network meta-analysis are outlined in Chapter 11.

17.6 Interpretation of evidence

As with other systematic reviews, reviews with a complexity focus are aimed at helping decision makers. They therefore need a clear statement of findings and clear conclusions, taking account of the quality of the body of evidence. In this, it is important to refer to Chapter 14, Chapter 15 and Montgomery et al (2019) for further guidance on the use of GRADE when assessing intervention effects, and to Chapter 21 when using CERQual to consider the confidence in synthesized qualitative findings.

For any review, consideration of how the review findings might apply in different contexts and settings is also important, and probably even more so when addressing intervention complexity. As noted in Section 17.1.3, the effects of an intervention may be significantly moderated by its context, and a review author may be able to describe which are the key aspects of context that the decision maker needs to consider, when deciding whether and how to implement the intervention in their setting. This can be done explicitly in the review by describing different scenarios (see Chapter 3) and by clearly describing the reasons for heterogeneity in results across the studies. One potential risk for reviews with a significant focus on complexity is that every implementation of every intervention can look different (although see the discussion on intervention function and form in Section 17.1.2.1); it is easy for a decision maker to conclude that, because there is no identical intervention or setting to the one in which they are interested, there is no evidence at all. However, as for any other review, it will be helpful to think about whether there are compelling reasons that the evidence from the review cannot be used to inform a new decision. In short, because of complexity (in interventions, and in their implementation) there will always be contextual differences, but this does not render the evidence unusable. Rather, review authors need to consider how this review-level evidence (about the effects of the intervention across different contexts) can be used to inform a new decision. For example, the review can show the range of effect size estimates, or how the types of anticipated and unanticipated outcomes vary, across settings in previous studies, thus giving the decision maker an idea of the range of responses that may be possible, as well as the possible moderating factors, in future implementations.

17.6.1 Reporting guidelines and systematic reviews

Systematic reviews that consider intervention complexity are themselves complex, integrating a wide range of different types of evidence using a range of methods. An extension of the PRISMA reporting guideline for systematic reviews, known as PRISMA-CI, has been developed with specific guidance for reporting the methods and results of reviews of ‘complex interventions’ (Guise et al 2017a, Guise et al 2017b). It focuses primarily on quantitative evidence and complements the TIDieR checklist for describing interventions (Hoffman et al 2014). The relevant extended items relate to clearly identifying the review as one covering ‘complex interventions’, providing justification for the specific elements of complexity under consideration in the review, and describing aspects of the complexity of the intervention or its context. The ENTREQ and eMERGe reporting guidelines are for reporting qualitative evidence syntheses and meta-ethnography (Tong et al 2012, France et al 2019). For mixed-method reviews no guidelines currently exist, but Flemming and colleagues suggest a ‘pick and mix’ approach to incorporate the appropriate reporting criteria from existing quantitative and qualitative reporting guidelines (see Chapter 21 for further details) (Flemming et al 2018). One of the challenges that review authors may meet when addressing complexity through incorporating a range of study designs beyond randomized trials is that GRADE assessments of evidence often turn out to be ‘low’, offering little assistance to readers in terms of understanding the relative confidence in the different studies included. See Montgomery et al (2019) for practical advice in this situation.

Increasing the quantity and range of evidence synthesized in a systematic review can make reports quite challenging (and lengthy) to read. Preparing a report that is sufficiently clear in its conclusions can take many rounds of redrafting, and it is also useful to obtain feedback from consumers and other stakeholders involved in the review (Chapter 1, Section 1.3.1). Intervention complexity can thus increase the resources needed at this phase of the review too, and it is essential to plan for this if the reporting of the review is to be sufficiently clear for it to be used to inform decisions. (See also Chapter 15 and online Chapter III.)

17.6.2 Summary of main points in this section

It is important (as with any review) to consider decision makers’ needs when conducting a review with a complexity focus. In practice, this means ensuring that there is a clear summary of how the findings vary across different contexts, and setting out the potential implications for decision making.

Involving users in the review process – particularly at the stage of defining the review question(s) – will help with producing a review that meets their needs.

Relevant reporting guidelines should be consulted to ensure that the methods and findings are accurately and transparently reported.

Further information in this Handbook: Chapter 2 on question formulation; Chapter 14 and Chapter 15 on completing ‘Summary of findings’ tables and drawing conclusions. See also Section 17.2.2 of this chapter for information on engagement with key users of the review in formulating its questions.

17.7 Chapter information

Authors: James Thomas, Mark Petticrew, Jane Noyes, Jacqueline Chandler, Eva Rehfuess, Peter Tugwell, Vivian A Welch

Acknowledgements: This chapter replaces Chapter 21 in the first edition of this Handbook (2008) and subsequent version 5.2. We would like to thank the previous chapter authors Rebecca Armstrong, Jodie Doyle, Helen Morgan and Elizabeth Waters.

Funding: VAW holds an Early Researcher Award (2014–2019) from the Ontario Government. JT is supported by the National Institute for Health Research (NIHR) Collaboration for Leadership in Applied Health Research and Care North Thames at Barts Health NHS Trust. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health.

17.8 References

Anderson L, Petticrew M, Rehfuess E, Armstrong R, Ueffing E, Baker P, Francis D, Tugwell P. Using logic models to capture complexity in systematic reviews. Research Synthesis Methods 2011; 2: 33–42.

Becker B. Model-Based Meta-Analysis. In: Cooper H, Hedges L, Valentine J, editors. The Handbook of Research Synthesis and Meta-Analysis. New York (NY): Russell Sage Foundation; 2009. p. 377–395.

Blankenship KM, Friedman SR, Dworkin S, Mantell JE. Structural interventions: concepts, challenges and opportunities for research. Journal of Urban Health 2006; 83: 59-72.

Booth A, Harris J, Croot E, Springett J, Campbell F, Wilkins E. Towards a methodology for cluster searching to provide conceptual and contextual "richness" for systematic reviews of complex interventions: case study (CLUSTER). BMC Medical Research Methodology 2013; 13: 118.

Booth A, Moore G, Flemming K, Garside R, Rollins N, Tuncalp Ö, Noyes J. Taking account of context in systematic reviews and guidelines considering a complexity perspective. BMJ Global Health 2019a; 4: e000840.

Booth A, Noyes J, Flemming K, Moore G, Tuncalp Ö, Shakibazadeh E. Formulating questions to explore complex interventions within qualitative evidence synthesis. BMJ Global Health 2019b; 4: e001107.

Brunton G, Stansfield C, Caird J, Thomas J. Finding Relevant Studies. In: Gough D, Oliver S, Thomas J, editors. An Introduction to Systematic Reviews (2nd ed). London: Sage; 2017.

Caldwell D, Welton N. Approaches for synthesising complex mental health interventions in meta-analysis. Evidence-Based Mental Health 2016; 19: 16-21.

Campbell M, Fitzpatrick R, Haines A, Kinmonth A, Sandercock P, Spiegelhalter D, Tyrer P. Framework for design and evaluation of complex interventions to improve health. BMJ 2000; 321: 694-696.

Christakis N, Fowler J. The Spread of Obesity in a Large Social Network over 32 Years. New England Journal of Medicine 2007; 357: 370-379.

Cook TD, Cooper H, Cordray DS, Hartmann H, Hedges LV, Light RJ, Louis TA, Mosteller F. Meta-Analysis for Explanation: A Casebook. New York (NY): Russell Sage Foundation; 1994.

Craig P, Dieppe P, Macintyre S, Michie S, Petticrew M, Nazareth I. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ 2008; 337: a1655.

Craig P, Cooper C, Gunnell D, Haw S, Lawson K, Macintyre S, Ogilvie D, Petticrew M, Reeves B, Sutton M, Thompson S. Using natural experiments to evaluate population health interventions: new MRC guidance. Journal of Epidemiology and Community Health 2012; 66: 1182-1186.

Craig P, Di Ruggiero E, Frohlich K, Mykhalovskiy E, White M, on behalf of the Canadian Institutes of Health Research (CIHR)–National Institute for Health Research (NIHR) Context Guidance Authors Group. Taking account of context in population health intervention research: guidance for producers, users and funders of research. Southampton; 2018.

Evans RE, Craig P, Hoddinott P, Littlecott H, Moore L, Murphy S, O'Cathain A, Pfadenhauer L, Rehfuess E, Segrott J, Moore G. When and how do 'effective' interventions need to be adapted and/or re-evaluated in new contexts? The need for guidance. Journal of Epidemiology and Community Health 2019; 73: 481-482.

Flemming K, Booth A, Hannes K, Cargo M, Noyes J. Cochrane Qualitative and Implementation Methods Group guidance series-paper 6: reporting guidelines for qualitative, implementation, and process evaluation evidence syntheses. Journal of Clinical Epidemiology 2018; 97: 79-85.

Fletcher A, Jamal F, Moore G, Evans RE, Murphy S, Bonell C. Realist complex intervention science: Applying realist principles across all phases of the Medical Research Council framework for developing and evaluating complex interventions. Evaluation 2016; 22: 286-303.

France E, Cunningham M, Ring N, Uny I, Duncan E, Jepson R, Maxwell M, Roberts R, Turley R, Booth A, Britten N, Flemming K, Gallagher I, Garside R, Hannes K, Lewin S, Noblit G, Pope C, Thomas J, Vanstone M, Higginbottom GMA, Noyes J. Improving reporting of Meta-Ethnography: the eMERGe Reporting Guidance. BMC Medical Research Methodology 2019; 19: 25.

Garside R, Pearson M, Hunt H, Moxham T, Anderson R. Identifying the key elements and interactions of a whole system approach to obesity prevention. London: National Institute for Health and Care Excellence; 2010.

Glenton C, Colvin CJ, Carlsen B, Swartz A, Lewin S, Noyes J, Rashidian A. Barriers and facilitators to the implementation of lay health worker programmes to improve access to maternal and child health: qualitative evidence synthesis. Cochrane Database of Systematic Reviews 2013; 10: CD010414.

Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Systematic Reviews 2012; 1: 28.

GRADE Working Group. Grading quality of evidence and strength of recommendations. BMJ 2004; 328: 1490-1494.

Guise JM, Butler ME, Chang C, Viswanathan M, Pigott T, Tugwell P. AHRQ series on complex intervention systematic reviews paper 6: PRISMA-CI extension. Journal of Clinical Epidemiology 2017a.

Guise JM, Butler M, Chang C, Viswanathan M, Pigott T, Tugwell P. AHRQ series on complex intervention systematic reviews paper 7: PRISMA-CI elaboration & explanation. Journal of Clinical Epidemiology 2017b.

Guyatt G, Oxman AD, Kunz R, Atkins D, Brozek J, Vist G, Alderson P, Glasziou P, Falck-Ytter Y, Schünemann HJ. GRADE guidelines: 2. Framing the question and deciding on important outcomes. Journal of Clinical Epidemiology 2011; 64: 395–400.

Harris K, Kneale D, Lasserson T, McDonald V, Grigg J, Thomas J. School-based self management interventions for asthma in children and adolescents: a mixed methods systematic review. Cochrane Database of Systematic Reviews 2018; 6: CD011651.

Hawe P, Shiell A, Riley T. Complex interventions: how "out of control" can a randomised controlled trial be? BMJ 2004; 328: 1561-1563.

Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. American Journal of Community Psychology 2009; 43: 267-276.

Higgins JPT, López-López JA, Becker BJ, Davies SR, Dawson S, Grimshaw JM, McGuinness LA, Moore THM, Rehfuess E, Thomas J, Caldwell DM. Synthesizing quantitative evidence in systematic reviews of complex health interventions. BMJ Global Health 2019; 4 (Suppl 1): e000858.

Hoffman T, Glasziou P, Boutron I, Milne R, Perera R, Moher D, Altman D, Barbour V, Macdonald H, Johnston M, Lamb S, Dixon-Woods M, McCulloch P, Wyatt J, Chan A-W, Michie S. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348: g1687.

Howick J, Glasziou P, Aronson JK. Evidence-based mechanistic reasoning. Journal of the Royal Society of Medicine 2010; 103: 433-441.

Howick J, Glasziou P, Aronson JK. Problems with using mechanisms to solve the problem of extrapolation. Theoretical Medicine and Bioethics 2013; 34: 275-291.

Kaufman J, Ryan R, Glenton C, Lewin S, Bosch-Capblanch X, Cartier Y, Cliff J, Oyo-Ita A, Ames H, Muloliwa AM, Oku A, Rada G, Hill S. Childhood vaccination communication outcomes unpacked and organized in a taxonomy to facilitate core outcome establishment. Journal of Clinical Epidemiology 2017; 84: 173-184.

Kelly M, Noyes J, Kane R, Chang C, Uhl S, Robinson K, Springs S, Butler M, Guise J. AHRQ series on complex intervention systematic reviews-paper 2: defining complexity, formulating scope, and questions. Journal of Clinical Epidemiology 2017; 90: 11-18.

Khuder SA, Milz S, Jordan T, Price J, Silvestri K, Butler P. The impact of a smoking ban on hospital admissions for coronary heart disease. Preventive Medicine 2007; 45: 3-8.

Kneale D, Thomas J, Harris K. Developing and Optimising the Use of Logic Models in Systematic Reviews: Exploring Practice and Good Practice in the Use of Programme Theory in Reviews. PloS One 2015; 10: e0142187.

Kneale D, Thomas J, Bangpan M, Waddington H, Gough D. Conceptualising causal pathways in systematic reviews of international development interventions through adopting a causal chain analysis approach. Journal of Development Effectiveness 2018: DOI: 10.1080/19439342.2018.1530278.

Krieger N. Who and What Is a “Population”? Historical debates, current controversies, and implications for understanding “Population Health” and rectifying health inequities. Milbank Quarterly 2012; 90: 634–681.

Lewin S, Hendry M, Chandler J, Oxman A, Michie S, Shepperd S, Reeves B, Tugwell P, Hannes K, Rehfuess E, Welch V, Mckenzie J, Burford B, Petkovic J, Anderson L, Harris J, Noyes J. Assessing the complexity of interventions within systematic reviews: development, content and use of a new tool (iCAT_SR). BMC Medical Research Methodology 2017; 17.

Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. BMJ 2015; 350: h2147.

Luke D, Stamatakis K. Systems science methods in public health: dynamics, networks, and agents. Annual Review of Public Health 2012; 33: 357-376.

Montgomery M, Movsisyan A, Grant S, Macdonald G, Rehfuess EA. Rating the quality of a body of evidence in systematic reviews of complex interventions: Practical considerations for using the GRADE approach in global health research. BMJ Global Health 2019; 4: e000848.

Moore G, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, Moore L, O’Cathain A, Tinati T, Wight D, Baird J. Process evaluation of complex interventions: Medical Research Council guidance. BMJ 2015; 350: h1258.

Nilsen P. Making sense of implementation theories, models and frameworks. Implementation Science 2015; 10: 53.

Noyes J, Gough D, Lewin S, Mayhew A, Welch V. A research and development agenda for systematic reviews that ask complex questions about complex interventions. Journal of Clinical Epidemiology 2013; 66: 1262-1270.

Noyes J, Hendry M, Lewin S, Glenton C, Chandler J, Rashidian A. Qualitative "trial-sibling" studies and "unrelated" qualitative studies contributed to complex intervention reviews. Journal of Clinical Epidemiology 2016a; 74: 133-143.

Noyes J, Hendry M, Booth A, Chandler J, Lewin S, Glenton C, Garside R. Current use was established and Cochrane guidance on selection of social theories for systematic reviews of complex interventions was developed. Journal of Clinical Epidemiology 2016b; 75: 78-92.

Noyes J, Booth A, Moore G, Flemming K, Tuncalp Ö, Shakibazadeh E. Synthesising quantitative and qualitative evidence to inform guidelines on complex interventions: clarifying the purposes, designs and outlining some methods. BMJ Global Health 2019; 4 (Suppl 1): e000893.

Oliver S, Dickson K, Bangpan M, Newman M. Getting started with a review. In: Gough D, Oliver S, Thomas J, editors. An Introduction to Systematic Reviews. London: Sage Publications Ltd.; 2017. p. 71-92.

Pantoja T, Opiyo N, Lewin S, Paulsen E, Ciapponi A, Wiysonge CS, Herrera CA, Rada G, Peñaloza B, Dudley L, Gagnon MP, Garcia Marti S, Oxman AD. Implementation strategies for health systems in low-income countries: an overview of systematic reviews. Cochrane Database of Systematic Reviews 2017; 9: CD011086.

Petticrew M. Time to rethink the systematic review catechism. Systematic Reviews 2015; 4: 1.

Petticrew M, Knai C, Thomas J, Rehfuess E, Noyes J, Gerhardus A, Grimshaw J, Rutter H, McGill E. Implications of a complex systems perspective for systematic reviews and guideline development in health decision-making. BMJ Global Health 2019; 4 (Suppl 1): e000899.

Pfadenhauer L, Gerhardus A, Mozygemba K, Bakke Lysdahl K, Booth A, Hofmann B, Wahlster P, Polus S, Burns J, Brereton L, Rehfuess E. Making sense of complexity in context and implementation: the Context and Implementation of Complex Interventions (CICI) framework. Implementation Science 2017; 12: DOI: 10.1186/s13012-017-0552-5.

Pigott T, Noyes J, Umscheid CA, Myers E, Morton SC, Fu R, Sanders-Schmidler GD, Devine B, Murad MH, Kelly MP, Fonnesbeck C, Kahwati L, Beretvas SN. AHRQ series on complex intervention systematic reviews-paper 5: advanced analytic methods. Journal of Clinical Epidemiology 2017; 90: 37-42.

Rehfuess EA, Stratil JM, Scheel IB, Portela A, Norris SL, Baltussen R. Integrating WHO norms and values into guideline and other health decisions: the WHO-INTEGRATE evidence to decision framework version 1.0. BMJ Global Health 2019; 4: e000844.

Rohwer A, Pfadenhauer L, Burns J, Brereton L, Gerhardus A, Booth A, Oortwijn W, Rehfuess E. Series: Clinical Epidemiology in South Africa. Paper 3: Logic models help make sense of complexity in systematic reviews and health technology assessments. Journal of Clinical Epidemiology 2017; 83: 37-47.